Learning to work with large data sets is like growing up: you start with spreadsheets, with fragile functions that break on any change to the data. Then you begin to crawl and maybe learn VLookups to join data together. Your data might be a hundred rows in a spreadsheet and works just fine. Your spreadsheet might scale up to a few thousand rows but it’s a little slow.

Moving into larger data, you have hundreds of thousands of rows and it just won’t work. You might find some hacks, but you want to go faster. You’re past the crawl stage and walking but you see people running.

The runners are the programmers. They can move faster, scale, and push the limits while you’re stuck in Excel. You can get places at two or three times the speed, but it means you need to be much more coordinated and agile. It’s a lot of work but you’re inspired!

You decide to take the leap with a couple of programming courses which—let’s be real—are boring. It’s like watching an instructional video of running. Yeah ... kind of get it conceptually but not really how you learn to run. You just need to do it.

You bust your knee a few times, definitely have a few bruises, but you’ve executed your first script and it freakin' worked! Screw those hundreds of thousands of rows, gimme millions! You start learning SQL and now hundreds of millions of rows are at your fingertips. You just learned to sprint. You just unlocked a world of new experiences, places and amazing adventures. It’s hard to think you couldn’t even walk.

When I first started, it was in Excel with a few rows of data. Right now, I’m running Apache Beam with Dataflow data pipelines that pull JSON API output files from Cloud Storage, spin up dozens of machines with workers that thatch together those JSON files and store them in BigQuery—all running on Cron jobs in App Engine.

Have no idea what that means? Ask me that six months ago and I would look at you like you had three heads. I didn’t get a computer science degree from Carnegie Mellon, nor do I have an advanced degree, mentor or formal programming education … I was a journalism major!

I’m not going to sugar coat it. The journey was hard, frustrating and had many times where I wanted to throw in the towel. I’m still challenged and don’t feel like I’m going to make it but guess what? I have and I will.

My boss recently asked me what I wish I would have known when I first started and I couldn’t get the idea out of my head. The main theme was the journey. A lot of people have the image of action-packed coding sessions … Matrix-style 3D models flowing on 10 monitors. Yeah … more like getting an error in what looks like a 30-year-old DOS terminal, then going to Stack Overflow (a Q&A site for programmers), copying the code in an answer and seeing if it works … rinse and repeat.

Below is the account of my long journey in programming that I wish I’d had when I first started my adventures in data. There were a lot of failures and tons of stubbornness but eventually, I learned.

2005-07: What is this matrix of cells called Excel?

My girlfriend in college told me about Excel. She had a simple budget I duplicated and learned how to use the sum function and reference other cells. I thought it was pretty cool but somewhat uninteresting because I wasn’t that into budgets. That’s all I thought Excel was for: accountants. Suffice it to say, I wasn’t into it.

I dabbled in spreadsheets for minor things like doing quick calculations but nothing more complex than a budget. At some point I learned about Google Docs, which had the same functionality. Cool, I could save my data online. Next.

2008-09: Oh, so Excel can be used for more than just budgets

I landed my first career job out of school at a consulting startup. It was just the owner and me. We had a programmer that would help build PHP sites but I mainly wrote blog posts and did marketing campaigns. As a side gig, I was asked to build a financial model. Why? No idea why someone thought a journalism major with next to zero Excel skills or business background should build a financial model.

I dusted off the ol' Excel and went to town. I built it, but I don't believe they received funding. Hopefully it wasn’t because an investor saw my model.

2010-11: Back to budgets in Excel, but now we’re dealing with real money

After doing door-to-door sales following the consulting gig, I landed a job in search engine optimization (SEO). One of the beauties of the consulting startup is I had the opportunity to do everything from social media management to paid advertisements and SEO.

I was hired as an SEO for an education lead gen site. I was also elected to run $100K PPC spends when the only PPC person left the agency. Just like the financial modeling … probably had no business doing it because I had zero training aside from a little at the startup. But again, I did it and freakin' loved it! This is where my Excel skills picked up after a long hiatus.

Google and Bing’s bulk ad upload tools required Excel and clients required pivot charts for reporting. I learned not to stack tables and how to clean data.

At this point, I thought programming was for computer scientists—not something that could help me manipulate data.

2011-13: Let’s get real with Excel, wait ... that’s hard

The company I was working for said they had one job for the SEO department (which had 3 team members at the time) and we had to fight for the job. I felt pretty good about staying because I was the only person that knew the PPC accounts but then again, I didn’t want to work for someone that made employment a gladiatorial competition. I moved on and applied for a PPC position at Seer. I was super confused why they were asking about SEO during the interview but realized they wanted me for SEO not PPC. Interesting interview ... but they took me nonetheless.

Around six months into my tenure, I started doing much more in Excel. I learned about macros, which blew my mind. So much automation! But I realized it was brittle and would break the second the program wasn’t in the exact same state as when the macro was recorded.

Doesn’t matter, I’d learn Visual Basic (VB), the programming language powering Excel. Like I was always told, sign up for a class and make it so. Yeah, learning how to create a popup window didn’t help me. I couldn’t see how it connected to the data I wanted to manipulate so I gave up.

2013-2014: First foray and failure in programming

The SEO data I wanted was locked up on servers I had to access through Application Programming Interfaces (APIs). So I took a Codecademy course on PHP. I messed around with PHP on a personal website but the second I crashed the site for changing a line in the code, I said eff it. Never again. But here I was trying to learn it.

I created a couple of cool visualizations using PHP—pulling from SEO APIs—but I wanted to get into web scraping and it didn’t seem like PHP was the solution. At the time, Seer employed a data scientist that pulled reports for our Analytics team. He loved R but advised against it. He pushed me towards Python because it had many applications—from programming lights to creating websites to doing machine learning. He laughed when I asked him if I should use PHP to scrape websites then said to learn Python.

Just as with PHP, I signed up for Codecademy but didn’t care about simulating shopping carts to understand the utility of classes. Again, I couldn’t see the direct application to what I was doing. Eff that. I swore off programming. Too hard and not a great direct application to my day-to-day.

2015: Millions of rows on my laptop

I did everything I could not to program, like using the Query function in Google Docs (which coincidentally uses SQL-like syntax, which would help me out later), ImportData and ImportHTML. Outwit was my go-to for a long time because it was point and click. No programming involved. I loved it. But then I wanted to scrape one URL, take that data then scrape more data, and so on. Outwit didn’t have sufficient documentation and examples so I was stuck. Again.

I knew I had to just do it. I needed to learn Python but traditional education doesn’t work for me. I don’t care about how many oranges Suzie has or John’s family tree (used commonly in class inheritance programming examples).

Around this time, I was single and read about this guy in WIRED that took time off his doctoral thesis to scrape OkCupid to find dates. I read his book and guess what? He did it all in Python. I made the decision then: eff it, I’m gonna be that weird dude, too. I wasn’t matching with anyone when I answered honestly, so either I’m a unique flower or their algorithm wasn’t great at matching people I’d get along with. I made a bet that it was the latter. Attaching something I emotionally cared about (finding a partner) to something that’s super dry (programming) was the only way.

2016-17: Millions of rows, online!

I didn’t have mentors, take classes or do anything you’d normally do to learn something very complex. I literally just Googled everything. For about the first 2-3 years of your programming career, you’re probably not going to have a problem hundreds of other people haven’t already encountered.

It took 6-9 hours to even get Python running on my computer! I was completely lost and felt like an idiot. My initial questions on Stack Overflow were met with snarky, intimidating comments. I didn’t feel comfortable posting my questions because I didn’t want to be spoken down to and feel even dumber. I relied on other people’s “stupid questions.”

Once I had Python working, I needed to connect to the internet to scrape web pages. The requests library made that relatively painless. Once I had the data from the OkCupid webpage, I needed to extract data. I needed something to parse the web page so I could easily extract user info. I learned about BeautifulSoup, which parsed web pages easily. Eureka! I could download the data and save it to CSVs.
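To give a feel for that download, parse and save loop, here is a minimal sketch. It is not my original scraper: it uses Python’s built-in `html.parser` as a stand-in for BeautifulSoup, and a hard-coded HTML snippet instead of a page fetched with requests, so it runs with nothing installed. The `profile`, `name` and `city` class names and the sample people are invented for the example.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical markup standing in for a downloaded web page.
SAMPLE_HTML = """
<div class="profile"><span class="name">Alex</span><span class="city">Philadelphia</span></div>
<div class="profile"><span class="name">Sam</span><span class="city">San Diego</span></div>
"""


class ProfileParser(HTMLParser):
    """Collects the text inside <span class="name"> and <span class="city">."""

    def __init__(self):
        super().__init__()
        self.rows = []       # one dict per profile found
        self._field = None   # the field we are currently inside, if any

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "div" and css_class == "profile":
            self.rows.append({})          # a new profile starts here
        elif tag == "span" and css_class in ("name", "city"):
            self._field = css_class

    def handle_data(self, data):
        # Only keep text that sits inside one of the spans we care about.
        if self._field and self.rows:
            self.rows[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


def html_to_csv(html):
    """Parse the HTML and return the extracted profiles as CSV text."""
    parser = ProfileParser()
    parser.feed(html)
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=["name", "city"])
    writer.writeheader()
    writer.writerows(parser.rows)
    return out.getvalue()


csv_text = html_to_csv(SAMPLE_HTML)
print(csv_text)
```

Swapping `io.StringIO()` for an opened file would write the same rows to a CSV on disk.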

Now, I needed to log into the OkCupid app. I learned you could inspect the page as it loads to see what API calls are made and knew that I could store cookies in a requests session—i.e. cookies are attached to your browser when you’re logged into a site and I could simulate that with the requests library.

This is great and all, but I wanted data from thousands of profiles without getting blocked, so I needed to do it slowly. I didn’t want to overload OkCupid’s servers with requests. That meant I needed to put my code on a server because it took too long to run on my computer. I would leave my computer running all night and the job wouldn’t be finished before I had to break the internet connection and go to work. Damn!

Enter: Servers. FML, I remembered the webserver I crashed when I had my PHP website and was scared to touch it again. Thankfully, Digital Ocean had some great tutorials. I spun up a webserver and made huge CSVs but ran out of memory when trying to process the data. Because I’m cheap and didn’t want to spend more money on my hobby, I learned SQL might handle the data a lot better.

Initially I started with SQLite, which is a simple file that allowed me to make SQL queries. I didn’t need to spin up a SQL server; I just used it like a CSV, and damn, it was fast and I could do awesome queries. Remember when I mentioned the Query function in Google Docs? Yep, that definitely helped in learning SQL so it didn’t feel like starting up another mountain.
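Here is roughly what that looks like with Python’s built-in `sqlite3` module. It is a sketch, not my actual schema: the `profiles` table, its columns and the rows are made up for illustration.

```python
import sqlite3

# ":memory:" keeps this demo self-contained. Passing a filename instead
# (for example a hypothetical "profiles.db") gives you the single-file
# database on disk, with no server to run.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE profiles (username TEXT, age INTEGER, city TEXT)")
conn.executemany(
    "INSERT INTO profiles VALUES (?, ?, ?)",
    [
        ("alex", 29, "Philadelphia"),
        ("sam", 34, "San Diego"),
        ("jo", 31, "Philadelphia"),
    ],
)
conn.commit()

# The kind of question that is painful in a giant CSV but one line in SQL:
# how many profiles per city, and what is their average age?
rows = conn.execute(
    "SELECT city, COUNT(*), AVG(age) FROM profiles GROUP BY city ORDER BY city"
).fetchall()
print(rows)
conn.close()
```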

A SQL server was a great place for storing profile data. The only problem was having the script run every night. All I had to do was execute the script on the server every morning and it would run while I was at work. But yeah ... a great programmer once told me the best developers are the laziest. So how do I have this script run every morning? Enter Cron jobs. Cron will execute server commands given a time interval. Now, I didn’t even need to be there. This was a game changer.

The nerd in me thought it was awesome having a scraper running while I slept, but I was also a little weirded out about using it for a dating site. But I focused on how to learn Python, server command lines, scraping, how website logins worked, Cron and SQL. Oh, and through my efforts, I had a girlfriend! Mission accomplished.

(And yes, I told her and everyone else I dated about it. Surprisingly, they didn’t run for the hills.)

If I can scrape web pages, communicate with APIs and store large datasets, I could build scalable tools for the team at work. I could start automating processes for the team. My god, this could be huge!

Our keyword research process was very manual and ripe for automating. I encountered something I’d never needed to do before: rate limiting. If you have 10,000 locations you want to look up on Yelp, you don’t just send 10,000 requests at the same time. This looks similar to a DDoS attack, and for smaller APIs it would overwhelm their servers and the API would fail. Most, if not all, APIs limit the number of times you can ping them per second, minute, hour, day or month.

How do I tell the program to slow down? Well, that’s where adding a sleep function came in handy. The program would pause for one second after each request to ensure it didn’t get blocked. What if I had more than one tool user at the same time? Rate limiting would break. Damn.
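The sleep approach can be sketched in a few lines. This is an illustration rather than my original tool: `fetch_all` and `fake_lookup` are hypothetical names, and `fake_lookup` stands in for a real API call so the example runs offline. The sketch pauses between requests rather than after the last one, which amounts to the same pacing.

```python
import time


def fetch_all(items, fetch, delay_seconds=1.0, sleep=time.sleep):
    """Call fetch(item) for each item, pausing between consecutive calls."""
    results = []
    for index, item in enumerate(items):
        if index > 0:
            sleep(delay_seconds)  # wait before every request except the first
        results.append(fetch(item))
    return results


def fake_lookup(location):
    """Stand-in for an API request; a real version would call the API here."""
    return {"location": location, "status": "ok"}


# A short delay keeps the demo quick; the real thing would use 1.0 or more.
results = fetch_all(["Philadelphia", "San Diego"], fake_lookup, delay_seconds=0.1)
print(results)
```

Each running copy of this loop keeps its own pause, so two people running it at once double the request rate. That is exactly the limitation that came up once more than one person used the tool.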

After much Googling, I found I needed to learn Celery. Celery is a task queuing system that creates workers to pull and execute tasks.

With Celery, I was able to queue millions of tasks from thousands of users and the workers would pause after each request. It was awesome! But I sucked at it and it broke over and over again. Story of my programming career.
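If the queue-and-workers idea is new to you, this sketch shows the shape of it using only Python’s standard library. To be clear, this is not Celery: Celery runs its workers as separate processes fed by a message broker, while this uses threads inside one script. The `run_workers` function and the squaring “task” are made up for the example.

```python
import queue
import threading
import time


def run_workers(tasks, handler, num_workers=3, pause_seconds=0.0):
    """Run handler(task) for every task using a small pool of worker threads."""
    task_queue = queue.Queue()
    for task in tasks:
        task_queue.put(task)

    results = []
    results_lock = threading.Lock()

    def worker():
        while True:
            try:
                task = task_queue.get_nowait()  # pull the next task, if any
            except queue.Empty:
                return  # queue drained, this worker is done
            outcome = handler(task)
            with results_lock:
                results.append(outcome)
            time.sleep(pause_seconds)  # pause after each task to respect a rate limit

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results


done = run_workers(range(10), lambda n: n * n, num_workers=3, pause_seconds=0.01)
print(sorted(done))
```

Because every request goes through the same queue, the pacing is shared no matter how many users add tasks, which is what the plain sleep loop could not do.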

While this was happening, I realized I needed a user interface so other team members could use it. A web framework built in Python was required. I tried Flask (Sentdex was an incredible resource) but I heard Django was more robust, would scale up and had great security features.

Now I’m learning Django, awesome. FML, what was an object-relational mapping (ORM) database!? I didn’t know what an object was and now I’m looking at an object-oriented database. Yeah, just pushed past that and kept going.

I pulled out some HTML and CSS skills I learned when making my bomb-ass MySpace profile in 2005 and created a user interface (UI). It was great because the folks over at Twitter created something called Bootstrap that had all of the CSS taken care of so my UI didn’t look like a high schooler’s MySpace page.

I had to learn about authentication, adding data into an ORM database, launching Celery workers, Cron jobs, etc. Now it’s all coming together. The maintenance was tough so Seer allowed me to employ a freelance worker from Ukraine who barely spoke English (communication was hard) and watched the same YouTube videos I did. I got no feedback on my code because it was similar to what he would have done. I look back in horror now.

2017-18: Let’s get smarter with machine learning

While all this was happening, I was a Team Lead and it was time that I put my programming and automation skills to work, full time. My role changed to Innovation Lead—a pretty nebulous title that meant I could work on research and finding new revenue opportunities for Seer. I did that with the automated keyword research, which netted a good amount of revenue, so what could I do if I just worked on these side projects?

Around this time, I read an article by WaitButWhy that changed my career trajectory. I knew some of the last jobs for humans would be that of a machine learning engineer so I signed up for Udacity’s Machine Learning Nanodegree. It was great because everything was in Python. There were several courses that were insanely math heavy and as a journalism major whose passion for math peaked in fifth grade, I definitely struggled. A lot. But there were forums and 1:1 mentoring which made all the difference.

Again, I’m not a big believer in formal education—especially when it comes to programming—but the nanodegree program was helpful as an overview of machine learning. I then applied those learnings to finding low quality sites our PPC team advertised on and mapping keywords to URLs for our SEO team.

2018-Present: Give me your terabytes!

The machine learning algorithms worked great for a few clients. Then Wil, the CEO of Seer, asked if we could add in all of our clients. We also hired an external dev shop, called Kinertia. They helped us move everything to Google Cloud Platform (GCP) and offered an insane amount of value.

I started thinking scale was going to be an issue. No longer could I throw my code on Digital Ocean because it’d run out of memory. This happened a lot when I automated keyword research for the SEO team. I just increased it every month and racked up pretty large bills.

The Analytics team went with BigQuery as their data warehouse. They also worked with a third party to shuttle Google Analytics data into BigQuery. Because our data was very custom, we (the devs, Wil, the founder and I) decided to go it alone. That meant we needed to learn how to scale data processing for gigabytes and terabytes.

I panicked. How the hell do I even start? Wil connected me to some of his contacts that work with really large data, like real-time querying of petabytes of data. They introduced me to the concept of extract, transform, load (ETL). Essentially, it’s like trying to figure out which car you should take across the country. You probably don’t want a Smart car because you can’t store all of your supplies, and a Hummer might be too expensive. A Prius or another economy car that’s going to be reliable would be a good choice. The same is true for ETL. You can do ETL in Excel. Most, if not all, people do this in PPC. They download the data from AdWords (extract), edit the data to create or modify campaigns (transform) and then push it back to AdWords (load).
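The three steps map neatly onto three small functions. This is a toy sketch, not our pipeline: the export text, column names and the `warehouse` list are all invented, with the list standing in for wherever the cleaned data would really be pushed.

```python
import csv
import io

# Hypothetical keyword report, as it might look when downloaded as CSV.
RAW_EXPORT = """keyword,clicks,cost
running shoes,120,54.00
trail shoes,0,3.50
running socks,45,12.25
"""


def extract(raw_text):
    """Extract: read the raw export into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def transform(rows):
    """Transform: drop keywords with no clicks and add cost per click."""
    cleaned = []
    for row in rows:
        clicks = int(row["clicks"])
        if clicks == 0:
            continue  # nothing to compute for keywords nobody clicked
        cost = float(row["cost"])
        cleaned.append(
            {"keyword": row["keyword"], "clicks": clicks, "cpc": round(cost / clicks, 2)}
        )
    return cleaned


def load(rows, destination):
    """Load: append the cleaned rows to the destination and report the count."""
    destination.extend(rows)
    return len(rows)


warehouse = []  # stand-in for a real destination such as a database table
loaded = load(transform(extract(RAW_EXPORT)), warehouse)
print(loaded, warehouse)
```

Tools built for large data follow the same three-step shape; the difference is how much data each step can handle and what it costs to run.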

Doing this for large data gets tricky because you can easily spend millions of dollars with enterprise databases like SAP, but we were on a budget. SAP would be like bringing a bazooka to a boxing match. Because we were already working with BigQuery on the Analytics side, I decided to take a Coursera specialization in Google Cloud Platform to get a lay of the land. It didn’t help with implementation as much as it helped me understand which tools to leverage and when; that chain of tools is called a data pipeline.

For instance, if I’m doing transactions—like archiving all tasks being sent to an API so I don’t call it more than once if the request was completed successfully—I’d use DataStore because it’s blazing fast. But if I want to store massive datasets and don’t care about fast responses, I’d use BigQuery. I also learned ML Engine could be a great tool for mapping keywords to the optimal page and classifying low quality sites. Task queues are great for the API rate limits because you can easily change the rate at which you execute tasks. App Engine was perfect for running Cron jobs and executing functions within the API.

We’re now using Apache Beam for the ETL: extracting raw files from Google Cloud Storage and joining that data together into a larger aggregate table. We’re executing those Beam data pipelines in the cloud with Google’s Dataflow, which is amazing! The documentation for Beam is pretty sparse and I have relied mainly on a few GitHub publishers to learn about the advanced functionality.

Words of advice I wish I would have had when I started:

It’s hard, it sucks, it's unforgiving, but it's worth it:

Being able to code feels like a super power. With Google Cloud Platform, we’re spinning up 30 machines that transform our data in 30 minutes for $1 when it would have cost hundreds on an expensive server. To me (a big nerd), that’s really cool. However, I know what it’s like to copy and paste and do that ETL manually so I can appreciate it more than your average person.

You don’t learn how to do that overnight and if you told me I’d have to endure the uphill battle for the last several years, I would have said hell no. Ain’t doing it. Take it one step at a time and don’t be too ambitious because you’ll scare yourself from even starting. Every programmer starts with “hello world.” Just Google “hello world” to get started. Don’t think about how hard it’s going to be to program your first Apple app in Swift before you know the basics.

Start with a passion project, not a course:

As creepy or nerdy as it sounds for someone to learn to code by using Python to scrape OkCupid … guess what? I’m running machine learning models, building apps and processing metric shit-tons of data in my sleep! The passion project could have been anything, but at the time I was looking for a girlfriend and it panned out. I started with how many oranges Suzie has and, for whatever reason, just couldn’t get into it.

No one is going to learn it for you:

So much of our life is about buying success. How many people do you know who took a language course and became fluent vs. the number of people who learned by being immersed in a country where the language is native? It's much easier to become fluent when immersed.

I’ve had team members tell me to teach them coding and machine learning techniques. When I ask to see their code they look at me like I have three heads. “What do you mean? You are going to teach me.” Eff that. Effort in, effort out. I can’t learn it for you or force you to learn it. It’s like when I was a kid. My parents bought me piano lessons for five years. I might have practiced a dozen times and eventually my piano teacher told me she was raising her rates and recommended I not continue pursuing piano. I was overjoyed! My parents brought me to the water but I wasn’t thirsty.

On the flip side, I’ll move pretty much anything from my calendar to review someone’s code. Nothing I love more than reviewing code with someone. I really wish I had found that person in the beginning. Meetups are great for that, especially because many of the people going are just getting into it. I’d recommend partnering with someone slightly ahead of you who can speak your language. An expert likely doesn’t remember what it’s like not to know. I run into this all the time with our devs. They’re incredibly smart and have that CompSci background, but it’s like explaining what the moon is to a dog sometimes. Some things I just leave on the table because I’m not there yet.

Know when to ask for help:

For very determined, type-A folks, it’s easy to just throw time at a problem. Wil has been integral in making me realize that sometimes working smarter, not harder, gets better results in the end. I wish I had asked for help. Codementor is amazing because you can hire experts for 15 minutes. I had problems I worked on for days. Days! When I hired a mentor, they knocked it out in five minutes. Now, I can do that for team members, which is incredibly rewarding.

What now?

Two things:

- The learning should never stop

- Know when to crawl, walk and run

1) While I now know how to do some really cool, big data engineering work, I found out from someone in the industry that there are several companies that do the same work for the AdWords API that I spent dozens of hours training to do. It’d be pretty easy to get upset about potentially dropping a system you sank blood, sweat and tears into.

Getting something launched isn’t always the goal. It might sound corny, but the journey is the goal. Some of the solutions we’re considering don’t have connectors for APIs we need, so guess what? We still need that data engineering. It’s not all for naught.

2) When I learned Pandas (a Python library that lets you work with tables of data in code, much like you would in Excel), I did everything in it! But it took longer. If I just wanted to replace “https://” in a URL with nothing, it’d take two seconds in a Google Sheet vs a few minutes in Pandas.

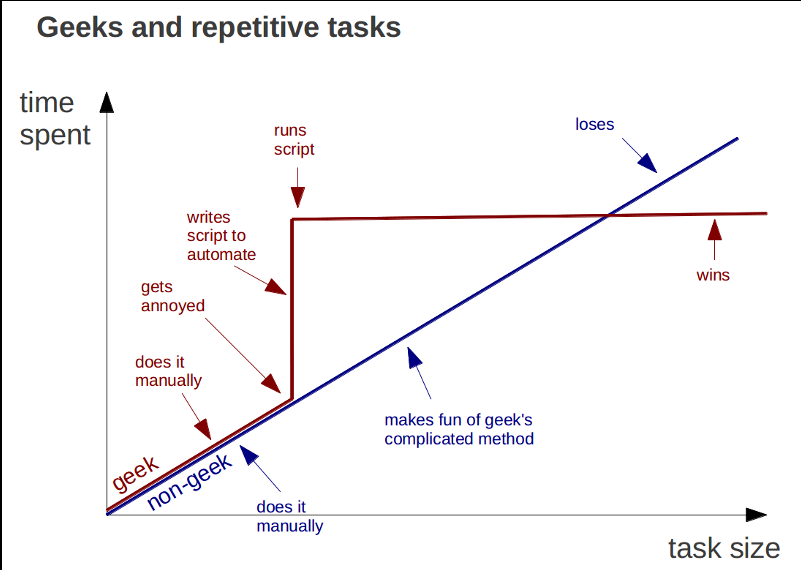

Here’s a great visual I came across that illustrates this point really well:

For really small tasks, Excel makes sense. Try piping a billion rows through it ... it’ll break in a second.
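For a sense of scale, the URL cleanup mentioned above is also a one-liner in plain Python, no Pandas required. The URLs are made up, and this is just one reasonable way to write it.

```python
urls = [
    "https://www.example.com/shoes",
    "https://example.com/",
    "http://example.com/plain",  # no "https://" here, so it is left alone
]

# Replace the first "https://" in each URL with nothing.
stripped = [url.replace("https://", "", 1) for url in urls]
print(stripped)
```

For three URLs a find-and-replace in a spreadsheet is still quicker. The code only starts to win when the list is too long to eyeball or the job has to repeat.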

The whole point of the journey is that you learn skills along the way so you know not just how but when to crawl, walk or run.