Helpful content … helps people.

Eight years ago I had a vision: let's start interviewing our clients’ customers as they went through our site’s content and our competitors' sites. While we might only record 8 customers, we always learned things about the customer we NEVER would have learned otherwise, and we also got a much better feel for whether our content was “helpful”.

"An algorithm will never tell me if my content is helpful or not, people will."

[TIP]

Try starting your audience research with the data you already have.

I’ll never forget the day I sat in on one of our content labs and heard a guy say, “If I had gotten to this page 4 years ago, it would have saved me several multi-day trips to Home Depot.”

When he said that during our audience interview, we knew we had something big for that client to invest in. Not because it had the right word length and the right entities mapped, but because we heard something from this man that no keyword research would have ever uncovered: multiple trips to the hardware store stink, and the better your content is, the fewer trips people have to make.

So when we decided to look into this update, I was hoping Google had gotten better at detecting that kind of sentiment algorithmically. If anyone could, it would be them. Boy was I wrong :)

Analysis Methodology

Let's get into our approach.

We took 1,560,987 keywords and pulled the top 100 URLs for each of those keywords.

We pulled on the following dates:

- Pre-Update: August 12-14, 2022

- Post-Update: September 9-10, 2022

That resulted in 31,550,933 URLs analyzed across 2,703,210 domains.
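To make the counting step concrete, here is a minimal sketch of how unique URLs and domains could be tallied from ranking rows. The row layout `(keyword, position, url)`, the helper names, and the sample rows are assumptions for illustration, not our actual pipeline or data. Stripping `www.` is only a crude stand-in for proper domain extraction.

```python
from urllib.parse import urlsplit

def domain_of(url):
    """Return the hostname without a leading 'www.' (a rough approximation of the domain)."""
    host = (urlsplit(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host

def summarize(rows):
    """rows: iterable of (keyword, position, url). Returns (unique URL count, unique domain count)."""
    urls = {url for _, _, url in rows}
    domains = {domain_of(url) for url in urls}
    return len(urls), len(domains)

# Hypothetical sample rows, one per keyword/position/URL observation.
sample_rows = [
    ("composite deck cost", 1, "https://www.example.com/deck-cost"),
    ("composite deck cost", 2, "https://example.org/decking"),
    ("best decking material", 5, "https://www.example.com/deck-cost"),
]

url_count, domain_count = summarize(sample_rows)
print(url_count, domain_count)
```

Running the same summary on the pre-update and post-update pulls separately gives you the two snapshots to compare.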

We run all these keywords for our clients on a monthly basis, so our data set is biased toward our clients' industries. We don’t believe that bias affects what we saw enough to be a concern.

We’re a few weeks past the completion of Google’s Helpful Content Update rollout. As more data rolls in, I plan to work back through the hypotheses I made after Google announced the change. But for now, this blog post is meant to show you some of the sights and sounds we see in our data, so you have better starting points for exploring your own.

Analysis Findings

Only got 1 Minute?

Big takeaways:

#1 - If you are not in the business of creating spammy sites with spammy links, don’t do anything yet.

#2 - From what we can see, very few quality domains got hit out of the 2.7 million domains we pulled.

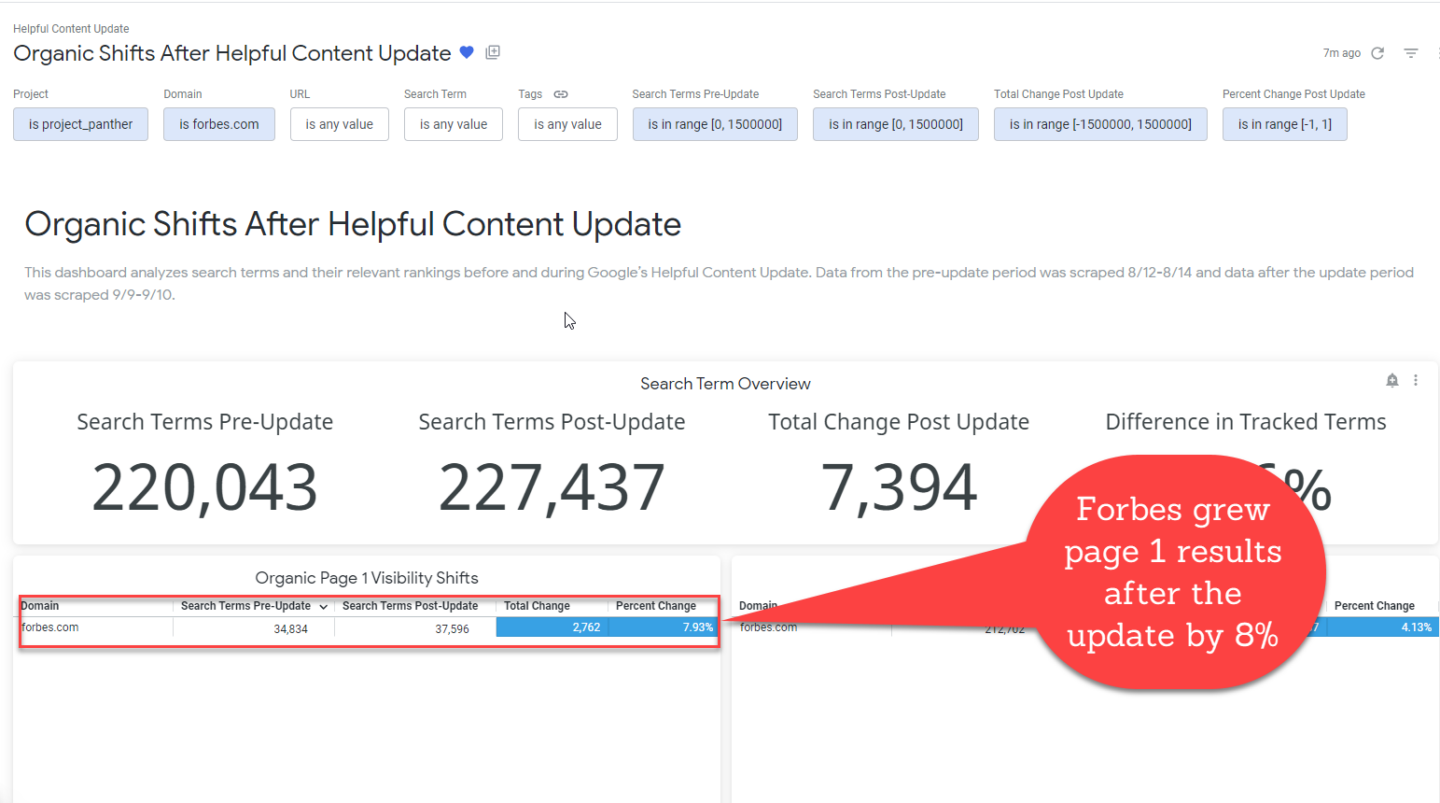

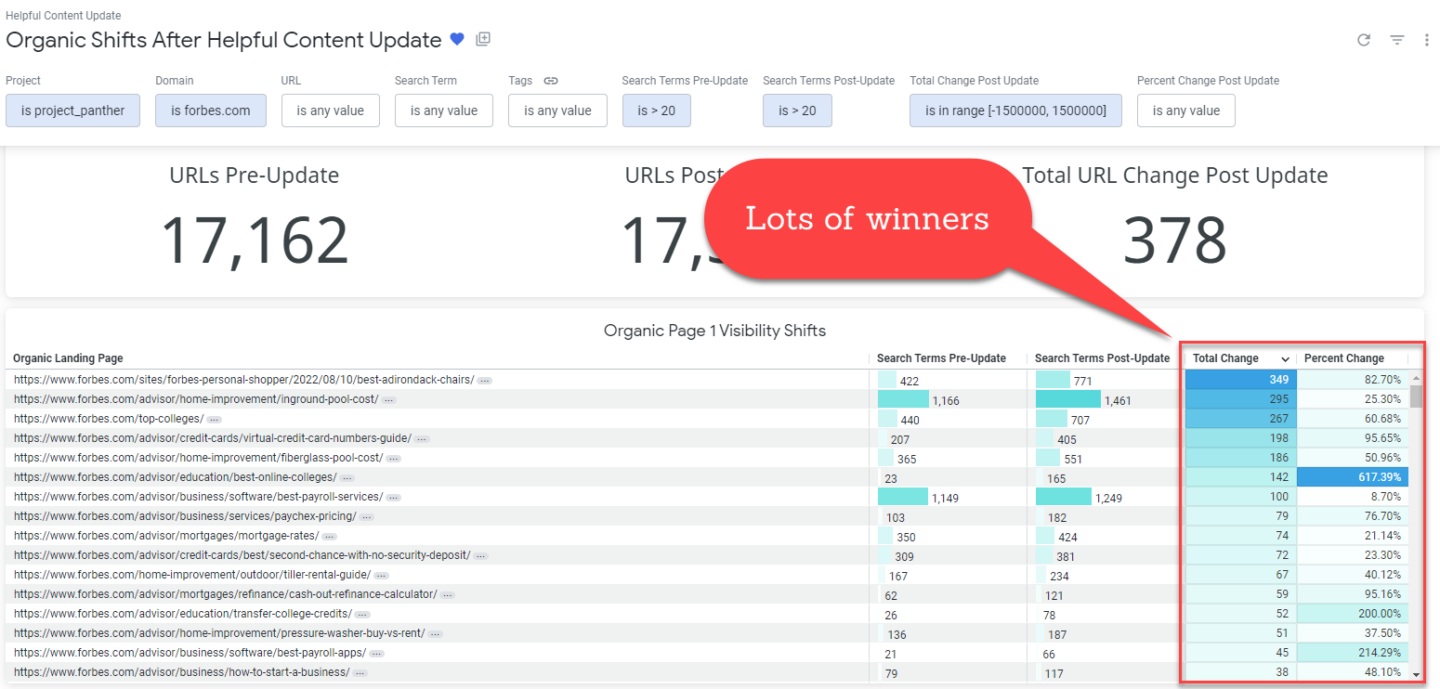

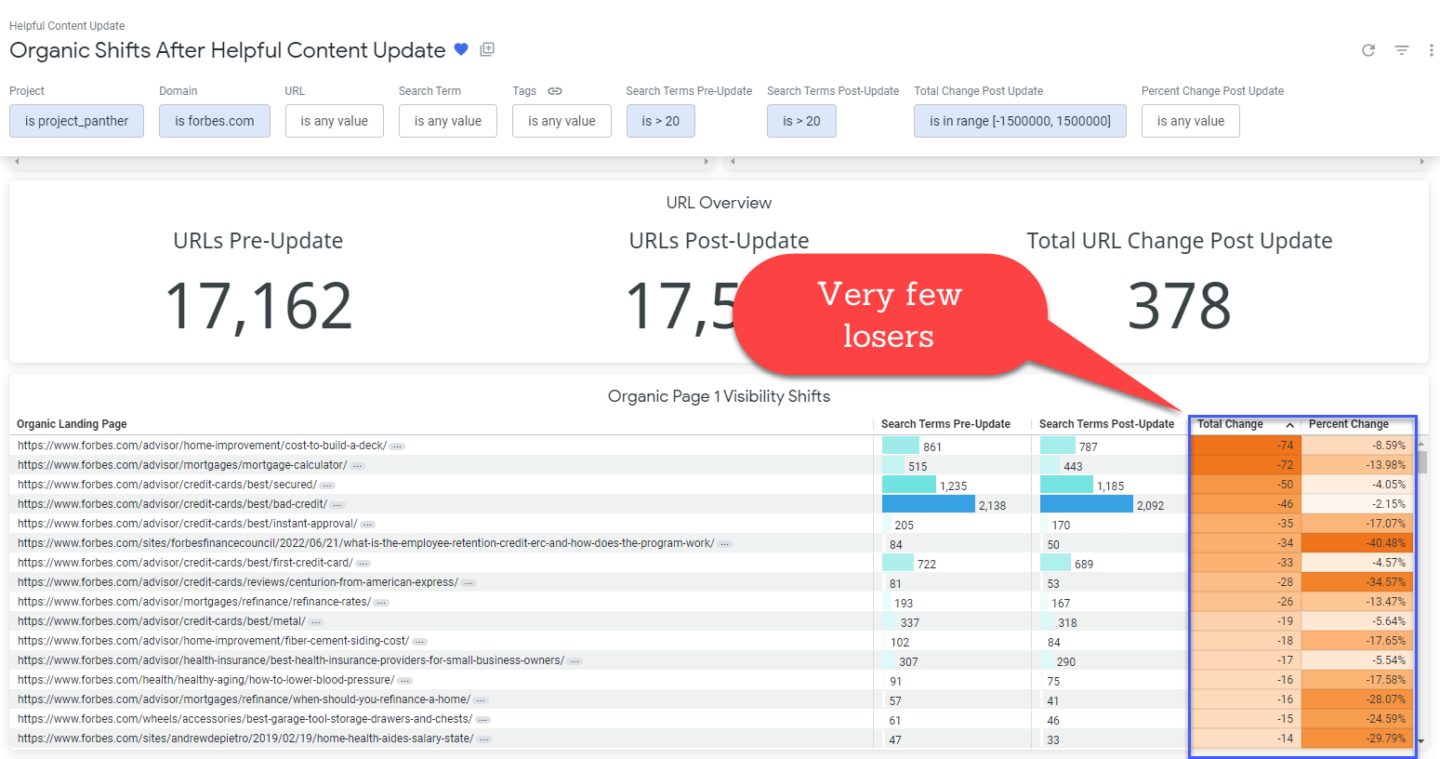

So my prediction that pages like this one on Forbes might take a hit … boy was I wrong about that.

Got 5 Minutes? (or more)

If you want to dig in a bit more on what we saw move and not move, you can get our exported list of domains & URLs below that got hit or grew after the update. This might help you run some of your own analyses. We paid a ton of money to get all this data, so we might as well open it up for you all to evaluate too. Please just share any of your findings back with us on Twitter.

Note: I used Majestic’s Trust Flow metric as a way to test the hypothesis that Google wasn't hitting any sites that seemed to be real brands. If that holds, it tells me that bigger brands are safe for now, but they should be developing a plan B in case they have some low-quality, mass-produced content.

Trust Flow is a score from 0-100 where sites linked closely to a trusted seed site are given a higher score.

TLDR - for domains that had at least 50 keywords ranking in the Top 100 before the update:

- 34 = # of domains that lost all their top 10 rankings (list); avg Trust Flow is 12

- 1,526 = # of domains that lost all rankings in the top 100 (list); avg Trust Flow is 3.2
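The two buckets above can be sketched roughly as follows. This is an illustration under assumptions, not the exact query we ran: the row layout `(keyword, position, domain)` and the function names are hypothetical, and treating the two buckets as mutually exclusive is a choice made here for simplicity.

```python
from collections import defaultdict

def keywords_by_domain(rows, max_position):
    """Map domain -> set of keywords where it ranks at max_position or better."""
    result = defaultdict(set)
    for keyword, position, domain in rows:
        if position <= max_position:
            result[domain].add(keyword)
    return result

def classify_losers(pre_rows, post_rows, min_keywords=50):
    """Return (domains that lost all top 10 rankings, domains that lost all top 100 rankings).

    Only domains with at least min_keywords keywords in the top 100 before
    the update are considered.
    """
    pre_100 = keywords_by_domain(pre_rows, 100)
    pre_10 = keywords_by_domain(pre_rows, 10)
    post_100 = keywords_by_domain(post_rows, 100)
    post_10 = keywords_by_domain(post_rows, 10)

    lost_top10, lost_top100 = [], []
    for domain, keywords in pre_100.items():
        if len(keywords) < min_keywords:
            continue  # too little pre-update visibility to judge
        if domain not in post_100:
            lost_top100.append(domain)
        elif domain in pre_10 and domain not in post_10:
            lost_top10.append(domain)
    return sorted(lost_top10), sorted(lost_top100)
```

Joining the resulting domain lists against a Trust Flow export is then a simple lookup and average per bucket.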

If you have built a LOT of content quickly, have managed to get some of it ranking in the top 10, have low domain trust, and the content is “algorithmic-like”, be careful.

If you’ve produced a lot of content quickly, have mostly top 100’s but not a lot of top 10’s, and have extremely low domain trust, it’s probably not even worth doing, as you’ll be the easiest to knock out.

Note: there are many reasons why domains might lose all their traffic. If you’re seeing declines in your data, diagnose your traffic losses before taking action.

For domains that had 1,000 - 5,000 keywords in the Top 100 pre and post and lost 50-90% of their rankings:

- 4 = # of domains that lost 50-90% of their top 10 rankings (list)

- 80 = # of domains that lost 50-90% in the top 100 (list)

For sites that lost the majority of their rankings,

For domains that had 1,000 - 5,000 keywords in the Top 100 pre and post and gained 50+% in their rankings:

- 0 = # of domains that gained 50+% in top 10 rankings

- 101 = # of domains that gained 50+% of all rankings in the top 100 (list); avg Trust Flow is 32.4

The average Trust Flow of the domains that gained rankings was far higher than that of the domains that lost all their top 100 rankings (32.4 compared to 3.2). Domains that gained 50% or more rankings had not (yet) earned new visibility on page 1 following the update.

Analysis Walkthrough

Winners

This Decks.com page increased its number of page 1 rankings by 51% post-update. https://www.decks.com/how-to/articles/best-composite-decking-materials-options

Observations:

- Custom imagery

- Authoritative domain

- No recognizable pattern in the content

Deckbros’ Trex Deck Problems page grew its number of page 1 rankings by 905%, from 56 keywords to 563. https://deckbros.com/trex-deck-problems/

Observations:

- Content structure starts out in a formulaic pattern and then expands into longer paragraphs
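For anyone checking the math in their own data, the percentages in this walkthrough are plain percent change on the page 1 keyword count. A minimal sketch (the function name is just for illustration):

```python
def pct_change(before, after):
    """Percent change from before to after; negative values mean a decline."""
    if before == 0:
        raise ValueError("percent change is undefined when the starting count is 0")
    return (after - before) / before * 100

# Deckbros: 56 page 1 keywords before the update, 563 after.
print(round(pct_change(56, 563)))  # 905
```

A page that starts from 0 page 1 keywords has no meaningful percent change, which is why the sketch raises an error instead of returning a number.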

Homeserve’s deck building cost guide increased its page 1 rankings by 367% (from 823 keywords pre-update to 3,848 following the rollout).

https://www.homeserve.com/en-us/blog/cost-guide/build-deck/

Observation:

- Content structure feels like it’s written by a real person, less formulaic

Losers

Decoalert’s 10x10 deck cost page saw its page 1 rankings fall from 111 keywords to 32 (-71%). (ahrefs) Their 20x20 post also lost 62% of its page 1 rankings.

https://decoalert.com/how-much-does-it-cost-to-build-a-10x10-deck/

https://decoalert.com/how-much-should-a-20x20-deck-cost-20/

Warning: Watch out for individual URLs that lost ALL RANKINGS. We’ve seen cases where the cause was a move from http to https, or a new URL carrying the same content after an update or refresh. Look for a URL gainer on the same keywords that offsets the URL loser before you ring the alarm.
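One rough way to run that check is to pair each URL loser with a gainer on the same host that picked up most of the same keywords. The sketch below assumes you have two dictionaries mapping URL to a set of keywords (lost and gained); the names and the 50% overlap threshold are assumptions, not a rule we applied.

```python
from urllib.parse import urlsplit

def host_of(url):
    """Hostname without a leading 'www.', ignoring http vs https."""
    host = (urlsplit(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host

def find_offsetting_gainers(lost, gained, min_overlap=0.5):
    """lost / gained: dict of url -> set of keywords the URL lost / gained.

    Returns a dict of lost url -> the same-host gainer that picked up the
    largest share of its keywords, if that share is at least min_overlap.
    """
    matches = {}
    for lost_url, lost_keywords in lost.items():
        if not lost_keywords:
            continue
        best_url, best_share = None, 0.0
        for gained_url, gained_keywords in gained.items():
            if host_of(gained_url) != host_of(lost_url):
                continue
            share = len(lost_keywords & gained_keywords) / len(lost_keywords)
            if share > best_share:
                best_url, best_share = gained_url, share
        if best_url is not None and best_share >= min_overlap:
            matches[lost_url] = best_url
    return matches
```

Any URL loser that shows up in the result is probably a migration or refresh rather than a real loss, so exclude it before counting casualties.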

Observations:

- Large table of contents including a range of sizes (may not be applicable to a single query)

- Domain with low authority/trust likely won’t succeed with this approach

- Formulaic approach to content structure (a question heading followed by a certain amount of words, a question heading then a certain amount of words, rinse and repeat)

- Likely easy to detect and may lack value for the reader

- Number of organic pages increased from ~2k in Jan 2022 to ~8k in Aug 2022

- Estimated organic traffic decline following the helpful content update

- Page 1 organic keywords decline following the helpful content update
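If you want to eyeball your own pages for the "question heading, fixed number of words, repeat" pattern described above, a crude profile like the one below can help. To be clear, this is only a heuristic I am assuming for illustration; Google's classifier is not public and this is not a reconstruction of it. The input format (a list of heading and body pairs) is hypothetical.

```python
from statistics import mean, pstdev

def structure_profile(sections):
    """sections: list of (heading, body_text) pairs for one page.

    Returns the share of headings phrased as questions and the relative
    spread of section lengths (standard deviation / mean of word counts).
    A high question share with very low length variation is the
    formulaic pattern worth a closer look.
    """
    if not sections:
        raise ValueError("need at least one section")
    word_counts = [len(body.split()) for _, body in sections]
    question_share = sum(h.strip().endswith("?") for h, _ in sections) / len(sections)
    average = mean(word_counts)
    length_variation = pstdev(word_counts) / average if average else 0.0
    return {"question_share": question_share, "length_variation": length_variation}
```

Treat the output as a prompt to go read the page, not as a verdict. Plenty of genuinely helpful FAQ pages will score as "formulaic" on a measure this simple.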

San Diego Contractor News’ page lost 96% of its page 1 rankings (in our data set), declining from 65 keywords prior to the update to 2 keywords after.

https://sandiegocontractor.news/how-much-does-a-20x20-composite-deck-cost/ (ahrefs)

Observations:

- Large table of contents, covering a range of sizes

- Pattern in content structure, potentially identified as automated content

- Declines in estimated organic traffic and page 1 keywords following the helpful content update

Household Advice’s comparison page lost 95% of its page 1 rankings following the update (from 821 keywords to just 42).

https://householdadvice.net/timbertech-vs-trex/ (ahrefs)

Observations:

- Large table of contents

- Content structure follows a formulaic pattern

- Estimated organic traffic and page 1 visibility (number of keywords) fluctuations surrounding various Google updates

The Forbes Prediction

Wrong.

See ‘#1 Stay in Your Lane’ in my Helpful Content Update Predictions post.

I thought Forbes, which is a financial site, writing content about picking the best tires was going to be an example of a site getting out of its lane. I was wrong. This page is still ranking top 3 for the query best truck tires.

What We Know About the Helpful Content Update

Attempts to Label Content

The update will contribute to ensuring that search results contain original content written by people and for people.

In the same way that I showed some of the table of contents examples above, you are going to want to sharpen your eye for what could be seen as automated content. I think Kevin Indig’s case study is one of the best approaches to testing your own content to see how “automated” it sounds.

One of Many Ranking Factors

The helpful content classifier is one of many signals that lead to rankings in search.

Google stated that this update contributes to rankings, but a site deemed unhelpful might still see no impact.

“sites classified as having unhelpful content could still rank well, if there are other signals identifying that people-first content as helpful and relevant to a query.”

Why would this be the case? There are several possible reasons. If most of your content is deemed “helpful”, then a page that could trigger the algo slap might be spared because you typically write helpful answers to people's questions. Personally, I could see brands playing a role here too: bigger brands send different signals that Google can process very well, compared with brand new content sites that launch thousands of pages overnight.

Continuous and Learning

Classification is automated and uses ML

This model is in its infancy in terms of the eventual impact it could have on how content is classified and on search results. What we know is that Google has some of the smartest AI & machine learning talent on the planet. We also know Gmail was in beta for 5 years, meaning Google is comfortable launching something and letting it improve over time (vs Apple, who obsesses about getting it right at launch).

Site-level Not URL-level

Potential for site-wide impact

A site’s ratio of content classified as unhelpful compared to helpful may not be fully assessed yet. It could be the case where a site has a ton of content classified as helpful but the sheer volume of content that is ‘unhelpful’ could impact the currently performing content … over time.

3 Things You Can Do Today

Develop hypotheses about “unhelpful content”. What trail does unhelpful content leave behind: high bounce rate, 1-page exits, etc.? Whatever it is, develop a hypothesis and monitor your content to see if you feel you are out of balance.

I said it above and I’ll say it again: talk to your customers. Another way is to use a tool like HotJar to put questions at the end of your content asking customers to rate its helpfulness. (Warning: when we did this on our own site, we got very few responses, but some insights are better than 0.)

[TIP]

Learn how to optimize for people: Why User Research Matters for Marketers

Get really good at understanding how your customers’ language is changing. This post is 6 years OLD but the ideas still apply (run n-grams on reviews, understand pain points, write to those): How to Write Better Ad Copy Using Reviews Ngrams

Lastly, get really good at extracting PAAs and understanding the questions on people’s minds before writing your content: [Video] Use "People Also Ask" At Scale To Write Content
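If you have never run n-grams on reviews, the idea is simple enough to sketch in a few lines. The reviews below are made-up examples and the function name is hypothetical; the linked post describes the fuller approach.

```python
import re
from collections import Counter

def top_ngrams(reviews, n=2, k=5):
    """Return the k most common n-word phrases across a list of review texts."""
    counts = Counter()
    for review in reviews:
        words = re.findall(r"[a-z']+", review.lower())
        counts.update(" ".join(words[i:i + n]) for i in range(len(words) - n + 1))
    return counts.most_common(k)

# Made-up sample reviews, purely for illustration.
reviews = [
    "Too many trips to the store",
    "So many trips for one deck",
]
print(top_ngrams(reviews, n=2, k=1))  # [('many trips', 2)]
```

Phrases that keep recurring across reviews are the pain points worth writing to, in the customer's own words.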

Hope you found this...helpful :)