Many people have noticed and commented on the recent changes to how Google Analytics reports sessions and tracks image referrals. As part of reviewing the effects these changes may have had on our clients, I wanted to dig into our Analytics to make sure that our data is as accurate as possible. One of the great things about working at an agency is that you have data sources from all sorts of industries and verticals, which makes systemic issues much easier to identify.

However, when digging into this data I found some startling results that date back well before the above-mentioned changes went into effect. To be clear, the examples below are not (in my humble opinion) caused by, or even correlated with, the recent changes to Google Analytics, but they are certainly something you should review when validating your past and future numbers.

Referring Keywords

Now, the first place I turn when validating data is always Google Analytics, the source of the data (imagine that!). When I looked at the data for a client that had seen increases lately, things looked great at first: our targeted keywords were moving in the right direction, and there were no abnormal spikes in one or two keywords that would indicate spam or faulty reporting.

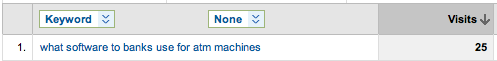

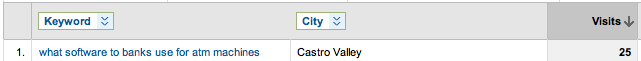

But just as I was cinching up my party hat and lacing on my dancing shoes, I noticed some strange long-tail terms driving traffic. Take this example:

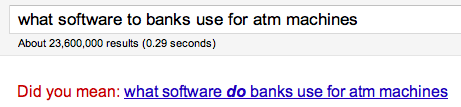

Not only is this an 8-word phrase that is only vaguely related to the client in question, but the grammar is all out of whack. In fact, Google even tried to correct me when I searched for it:

Now that this had caught my attention, I wanted to dig a little deeper.

Please Note: I recognize that 25 visits will not necessarily make or break our reporting. However, this is just one example of a phenomenon we observed across many keywords and multiple clients.

Now, we know that we recorded 25 visits over the past month, but how were these split up?

Hmm, OK. Already I'm scratching my head, but our judicial system requires proof beyond a shadow of a doubt, so maybe a news story or an algorithm shift earned us some temporary rankings for this phrase. Skeptical as I was, if these visits were legitimate, you would expect some variation in their source, right? Well, according to the same Google Analytics that reports a spike in visits for this keyword, all of these visits came from the same city:

Note: When we set our location to Castro Valley, the website did not appear until position #11 in Google Chrome's Incognito mode (used to prevent personalization bias).

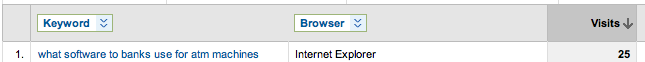

The visits also all came from the same browser:

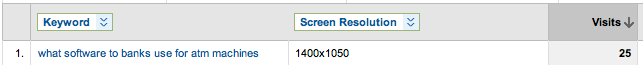

And even the same Screen Resolution:

Now that I'm pretty confident there are some issues here, I want to give two more examples of where we're seeing this issue arise under different circumstances.
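Checking city, browser, and screen resolution one keyword at a time gets tedious across many clients. Below is a minimal sketch of how that check could be automated, assuming you have exported, for each keyword, rows of visit counts broken down by those three dimensions. The field names (`city`, `browser`, `resolution`, `visits`), the function names, and the thresholds are all hypothetical choices for illustration, not part of Google Analytics. The idea: measure what share of a keyword's visits fall into its single most common city/browser/resolution combination, and flag keywords where nearly every visit looks identical.

```python
from collections import Counter

def top_fingerprint_share(rows, dims=("city", "browser", "resolution")):
    """Share of a keyword's visits that fall into its single most common
    combination of `dims`. 1.0 means every visit looks identical."""
    totals = Counter()
    for row in rows:
        # Group visit counts by the (city, browser, resolution) "fingerprint".
        totals[tuple(row[d] for d in dims)] += row["visits"]
    total = sum(totals.values())
    if total == 0:
        return 0.0
    return max(totals.values()) / total

def flag_keywords(rows_by_keyword, min_visits=10, threshold=0.9):
    """Return keywords with at least `min_visits` visits where at least
    `threshold` of those visits share one fingerprint (hypothetical cutoffs)."""
    flagged = []
    for keyword, rows in rows_by_keyword.items():
        visits = sum(r["visits"] for r in rows)
        if visits >= min_visits and top_fingerprint_share(rows) >= threshold:
            flagged.append(keyword)
    return flagged
```

The `min_visits` floor keeps one-off long-tail visits (which trivially share a single fingerprint) from flooding the results; a flagged keyword is a prompt for manual review, not proof of bad data.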

Do I Have a Stalker?

In a client call earlier this week, we discussed the curious fact that one of the top traffic-driving keywords for the entire month of June was the name of one of the Sales Executives. If this were the CEO or Director of Marketing who had just spoken at a conference or been featured on the news, that would be one thing. But this was a Sales Executive who was herself a little confused as to why her name would drive so many visits to the corporate website. When we looked into the data, we saw the same patterns described above: same browser, operating system, city, screen resolution, etc. What was different about this example, however, was that the visits were dispersed over several days:

Let's Put it to the Test!

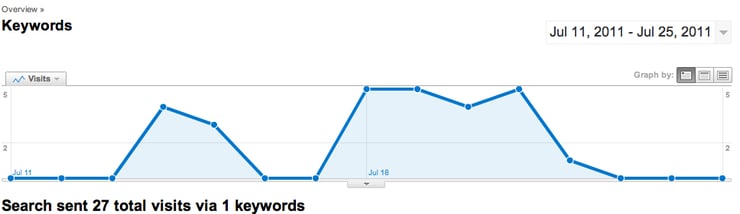

To figure this out, the client ran a test: he copied a random block of text from the homepage, searched for it, and clicked through to the site's listing in Google. What happened next gives us the greatest cause for concern: for the following week, the client left open the tab through which he had accessed the SERP listing. To be clear, he did not revisit the tab, refresh it, or repeat the query in Google. Yet over the course of that week, the single visit he made as a test was recorded as 27 visits in Google Analytics:

You will notice that no visits were recorded on Saturday and Sunday, when he was out of the office and not using his computer. We're not certain what caused this activity; there is some speculation that it may have been an auto-refresh in the browser, or that the visits were recorded as the computer woke from "Sleep Mode," but we have no confirmation at this point. (We are, however, currently testing whether this might be the case.)

Once the tab was closed and the cache was cleared on Friday, July 22, these visits were no longer being recorded and the traffic for the keyword returned to zero.

They Just Can't Get Enough!

This last example shows how you can recognize potentially faulty reporting by looking for abnormalities in your metrics. The term in question is one that we've been tracking for quite some time, so we know for a fact that its rankings have not moved over the past 2 weeks. However, this week we saw visits spike with no discernible explanation. The Sherlock Holmes in me was, once again, intrigued. How did a term with no ranking movement, no notable new references, and no changes in search activity that I could detect spike so much?
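The reasoning here (visits jumped while rankings stayed flat) can be turned into a rough automated check. This is a minimal sketch, assuming you have a daily visit series for a keyword from Analytics and a matching daily ranking series from your own rank tracker; the function name, the 14-day baseline, and the 3x spike factor are hypothetical choices, not an established method.

```python
from statistics import mean

def spike_without_rank_change(daily_visits, daily_rank,
                              baseline_days=14, spike_factor=3.0):
    """Return True if the latest day's visits exceed `spike_factor` times the
    average of the previous `baseline_days` days while the tracked ranking
    stayed identical over that whole window."""
    if len(daily_visits) <= baseline_days or len(daily_rank) <= baseline_days:
        return False  # not enough history to judge
    # Trailing baseline excludes the latest day itself.
    baseline = mean(daily_visits[-baseline_days - 1:-1])
    latest = daily_visits[-1]
    rank_unchanged = len(set(daily_rank[-baseline_days - 1:])) == 1
    # max(baseline, 1) avoids flagging every visit on a term that had zero traffic.
    return rank_unchanged and latest > spike_factor * max(baseline, 1)
```

A spike with a flat ranking is not automatically fake (seasonality or news can do it), but it is exactly the case where the data deserves a second look before anyone celebrates.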

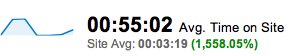

Rather than rehash the points above, I suggest you also look for abnormalities in pages per visit and average time on site, as these will show you where there was unusual (and potentially incorrect) reporting of activity on your site. Normally, we wouldn't place too much stock in these metrics, but for the sake of this argument they provide valuable insight into the shortcomings of Google Analytics. As you can see below, these metrics increased absurdly:

The biggest thing to look at here is the comparison to the site average: if we averaged 55 pages per visit across the site, this wouldn't be remarkable. However, a 1300% increase in pages per visit and an almost 1600% increase in average time on site are strong indicators that something is wrong with this data, and that it is likely skewing your results to appear more favorable.
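For clarity on the arithmetic: the increase over the site average is (segment value - site average) / site average x 100, so a 1300% increase means the keyword's value is 14 times the site average, not 13. A minimal helper for this comparison is sketched below; the function names and the 500% flagging cutoff are hypothetical, chosen only for illustration.

```python
def percent_above_average(segment_value, site_average):
    """Percentage by which a segment's metric exceeds the site-wide average."""
    if site_average == 0:
        raise ValueError("site_average must be non-zero")
    return (segment_value - site_average) / site_average * 100

def is_outlier(segment_value, site_average, max_percent=500):
    """Flag a segment whose metric exceeds the site average by more than
    `max_percent` percent (the default cutoff is an arbitrary example)."""
    return percent_above_average(segment_value, site_average) > max_percent
```

For example, `percent_above_average(14, 1)` returns `1300.0`, and `percent_above_average(8, 2)` returns `300.0`. Applying the same helper to pages per visit and to average time on site lets you compare both metrics against their site averages on a common scale.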

So what does this mean for you?

Before you celebrate monumental wins (or mourn losses, for that matter), always validate the data. Just as we always ask, "What strategy may have caused this?", we should also ask what reporting deficiencies may have caused it.

Another suggestion is to look at Unique or Absolute Visitors, which, in our review, seem to provide more realistic numbers and are not affected by (for example) the changes to how sessions are recorded.
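One way to put that suggestion to work is to compare visits against unique visitors for each keyword. The sketch below assumes, as our review suggests, that unique visitor counts are not inflated the same way visits are; if that holds, a case like the test above (one real visit reported as 27) would be expected to show up as a very high visits-per-visitor ratio. The tuple layout, function name, and cutoff are hypothetical.

```python
def flag_inflated_keywords(rows, max_ratio=3.0):
    """rows: iterable of (keyword, visits, unique_visitors) tuples from a
    hypothetical export. Return keywords whose visits per unique visitor
    exceed `max_ratio`."""
    flagged = []
    for keyword, visits, unique_visitors in rows:
        # Skip rows with no unique visitors to avoid dividing by zero.
        if unique_visitors > 0 and visits / unique_visitors > max_ratio:
            flagged.append(keyword)
    return flagged
```

For example, `flag_inflated_keywords([("test phrase", 27, 1), ("normal term", 100, 80)])` returns `["test phrase"]`. Repeat visitors are normal, so the cutoff should be tuned against what looks typical for each site.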

Finally, always make sure you're looking beyond your top 3, 5, or even 10 keywords. While a reporting error of 25 visits (as in our first example) may not be a huge deal, a 25-visit error across 50 or 100 keywords can, in aggregate, seriously hurt the validity of your data.
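To see how quickly this adds up: 25 phantom visits on each of 50 keywords is 1,250 visits, and on 100 keywords it is 2,500. The sketch below (hypothetical function name; the total-visit figure in the example is made up for illustration) sums the suspect visits across flagged keywords and expresses them as a share of all reported visits.

```python
def inflated_share(suspect_visits_by_keyword, total_visits):
    """Return (total suspect visits, fraction of all reported visits)."""
    suspect = sum(suspect_visits_by_keyword.values())
    share = suspect / total_visits if total_visits else 0.0
    return suspect, share
```

For example, `inflated_share({f"keyword {i}": 25 for i in range(100)}, 10_000)` returns `(2500, 0.25)`: on a hypothetical site with 10,000 reported visits, a quarter of the traffic would be phantom.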

Are you seeing similar results in your data? We encourage you to share your thoughts or feedback in the comments section below. If you have specific questions you can also feel free to reach out to Brett on Twitter.