Ever since ChatGPT was released in November 2022, digital marketers have been continually testing and pushing the boundaries of the tool.

Seer is no exception. From keyword categorization to internal linking, we’ve been on a mission to figure out how ChatGPT can drive efficiency for our team and clients.

Our culture of testing was on display in Anthony’s May 2023 post on using ChatGPT to write meta descriptions.

Spoiler alert: Anthony’s test uncovered that using ChatGPT led to decreased performance.

However, one of the variables he noted was the small sample size: only 4 pages were included in the test.

So, we decided to test how the results may vary with a larger sample size.

Test Results TLDR

When it comes to writing meta descriptions with ChatGPT vs having our team manually write them, this is what we found:

+176% lift in click-through rate (CTR) for manually written meta descriptions vs. ChatGPT-4, measured on each group’s period-over-period CTR change

-21.5% decline in CTR for pages with ChatGPT-4-written meta descriptions

>6x faster to write meta descriptions with ChatGPT-4 than manually

While ChatGPT offers clear efficiency benefits, its performance is not close enough to manual writing to justify using the tool exclusively for meta description optimization.

Multiple variables were at play, so a third test is needed. Read on for a more detailed breakdown of this test.

Hypotheses

- If ChatGPT-4 can produce meta descriptions that perform comparably, then we should use the tool more often than writing meta description optimizations manually.

- If Google frequently rewrites meta descriptions and titles, then we should limit the time we spend writing them from scratch.

Methodology

Test groups

We categorized a list of 237 pages from the Seer blog into three groups:

| Method | Number of Pages in Group | Author | Process |
|---|---|---|---|
| Manual Test Group | 57 | Seer team | Our current process of keyword research and bespoke titles and descriptions. |
| GPT Test Group | 90 | ChatGPT-4 + LinkReader plugin | We used the LinkReader plugin so the tool could crawl the content of each page and provide relevant meta descriptions. |
| Google Test Group | 90 | N/A | We created this group intending to leave all title tags and meta descriptions blank for Google to fill in automatically. |

Generating the Meta Descriptions

ChatGPT-4 Meta Descriptions

We “trained” ChatGPT-4 by asking it to explain what an SEO-optimized meta description and title tag should look like, and by instructing it to “act as an SEO practitioner” so it was as well prepared for the task as possible.
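The priming steps above amount to a short conversation that happens before the real request. As a rough sketch, assuming the role/content message format that chat-style APIs commonly use, that conversation could be assembled as below. The `build_messages` helper, the prompt wording, and the example URL are hypothetical; the test itself used ChatGPT-4 with the LinkReader plugin, not this code.

```python
# Hypothetical sketch: the message wording and structure below are
# illustrative assumptions, not the exact prompts used in this test.


def build_messages(page_url: str, primer_reply: str) -> list[dict[str, str]]:
    """Build a role/content chat history that primes the model before the ask.

    primer_reply is the model's own earlier answer to the priming question,
    replayed as an assistant turn so the final request has that context.
    """
    return [
        # Role assignment, mirroring the "act as an SEO practitioner" step.
        {"role": "system", "content": "Act as an SEO practitioner."},
        # Priming question: have the model describe what "good" looks like.
        {
            "role": "user",
            "content": (
                "Explain what an SEO-optimized meta description "
                "and title tag should look like."
            ),
        },
        {"role": "assistant", "content": primer_reply},
        # The actual request. In the test, the LinkReader plugin is what let
        # ChatGPT-4 read the page; this sketch only passes the URL as text.
        {
            "role": "user",
            "content": (
                f"Read the page at {page_url} and write an SEO-optimized "
                "title tag and meta description for it."
            ),
        },
    ]


if __name__ == "__main__":
    for msg in build_messages("https://example.com/post", "(model's explanation)"):
        print(f"{msg['role']}: {msg['content']}")
```

Replaying the model’s explanation as an assistant turn keeps the final request grounded in the definition the model just gave, which is the point of asking for it first.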

Google Meta Descriptions

As mentioned, we initially intended to leave the Google test group’s meta descriptions blank. However, we discovered that HubSpot won’t allow a page to be published without these elements.

As a result, we used each article’s name as its title tag and took one of two approaches for the meta description:

- Left it as a single period.

- Or entered “We are not including an optimized meta description here to test how often Google rewrites meta descriptions.”

We did this so the meta descriptions would contain no information relevant to the page, giving Google free rein to rewrite them as it pleased.

Measurement

Our measurement plan included standard SEO metrics:

- URL click-through rate (CTR)

- URL rankings

- Number of rewritten titles and descriptions

We also measured efficiency (time spent) when using ChatGPT-4 for meta descriptions vs. our typical manual process.

Results

After a month of testing our meta description optimizations, here’s what we saw for each group:

| Method | CTR (Pre) | CTR (Post) | CTR Period-over-Period (PoP) % Change |
|---|---|---|---|
| Manual | 0.96% | 1.12% | +16.26% |
| Google | 0.25% | 0.27% | +10.55% |
| ChatGPT-4 | 0.17% | 0.13% | -21.48% |
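The PoP % change column is the standard relative change, (post - pre) / pre. A minimal Python sketch that recomputes it from the displayed CTRs is below. Because the table rounds CTRs to two decimals, the recomputed values (roughly +16.7%, +8.0%, and -23.5%) differ from the reported ones; each reported figure falls within the range that rounding allows, so they were presumably computed from unrounded CTRs.

```python
def pop_change(pre: float, post: float) -> float:
    """Period-over-period % change: (post - pre) / pre * 100."""
    return (post - pre) / pre * 100


# CTRs (in %) as displayed in the results table; these are rounded values.
ctr = {
    "Manual": (0.96, 1.12),
    "Google": (0.25, 0.27),
    "ChatGPT-4": (0.17, 0.13),
}

for method, (pre, post) in ctr.items():
    print(f"{method}: {pop_change(pre, post):+.1f}%")
```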

SEO Metrics

These metrics help us determine what actually performs best in terms of driving traffic to the website.

Winner: Manually Written Meta Descriptions

Over the month-long test, the Manual group outperformed both the ChatGPT-4 and Google groups.

ChatGPT-4 was the only group whose CTR decreased, falling 21.5%.

Manual Method CTR Data (lifts compare each group’s period-over-period CTR change):

- +176% lift vs. ChatGPT-4

- +54.1% lift vs. Google

- +16.3% lift vs. the Manual group’s previous-period CTR
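These lift figures do not match a direct comparison of post-test CTRs (1.12% vs. 0.13% would be a far larger gap than +176%). They are, however, consistent with comparing the groups’ reported PoP changes as (a - b) / |b|, where the absolute value keeps the result meaningful when b is negative. This reading is inferred from how the numbers line up rather than stated in the test; a quick check in Python:

```python
def relative_lift(a: float, b: float) -> float:
    """Lift of a over b as a % of b's magnitude: (a - b) / |b| * 100."""
    return (a - b) / abs(b) * 100


# Reported CTR PoP % changes from the results table.
manual, google, chatgpt = 16.26, 10.55, -21.48

print(f"Manual vs. Google:    {relative_lift(manual, google):+.1f}%")   # +54.1%
print(f"Manual vs. ChatGPT-4: {relative_lift(manual, chatgpt):+.1f}%")  # +175.7%
```

The +16.3% figure needs no extra step: it is the Manual group’s own PoP change.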

Efficiency Metrics

These metrics help us understand which method lowers the level of effort for the practitioners creating the meta descriptions.

Winner: ChatGPT-4 Written Meta Descriptions

ChatGPT-4 enabled us to complete meta descriptions >6x faster than the manual process.

ChatGPT-4 Method Efficiency Data:

- 2.5 minutes per page vs. 19.1 minutes per page when writing meta descriptions manually

- 3.75 hours for 90 pages vs. 18.12 hours to manually write meta descriptions for 57 pages
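The per-page figures follow from the totals as hours × 60 / pages. Normalizing per page matters here because the two groups had different page counts (90 vs. 57), so the raw hour totals aren’t directly comparable. A small sketch using the numbers above:

```python
def minutes_per_page(hours: float, pages: int) -> float:
    """Convert a total time in hours into average minutes per page."""
    return hours * 60 / pages


gpt = minutes_per_page(3.75, 90)       # 2.5 min/page
manual = minutes_per_page(18.12, 57)   # ~19.07 min/page, reported as 19.1
speedup = manual / gpt

print(f"ChatGPT-4: {gpt:.1f} min/page, manual: {manual:.1f} min/page")
print(f"Speedup: {speedup:.1f}x")      # ~7.6x, consistent with ">6x"
```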

What’s Next?

So I shouldn’t use ChatGPT for Meta Descriptions?

We don’t believe it’s time to give up writing meta descriptions manually yet, but “don’t use ChatGPT for meta descriptions” is not the conclusion we’ve drawn here either.

This technology is evolving at a quicker clip than we’ve ever seen before, so we will continue to test and learn in this space.

The primary benefit of using ChatGPT-4 is efficiency. However, at this time its performance doesn’t seem comparable enough to justify generating meta descriptions exclusively with the tool.

Next test

Because this test had multiple variables, in our next test we’ll replace the meta descriptions on half of the Manual group’s pages with ones written by ChatGPT-4, following a process similar to the one used here.

Will this change result in a significant shift in CTR once variables are as consistent as possible? Stay tuned.

In our next test, we’ll also make greater use of our data platform.

We’ll integrate both paid data (such as converting keywords) and organic features (such as People Also Ask questions) in order to provide ChatGPT additional context for meta description optimizations.

Additional Data + Test Variables

Organic rankings

Did it impact the test? Yes.

Average organic rankings shifted only minimally across the test periods, so we can’t establish a correlation between organic rankings and meta description methods.

The ChatGPT-4 group saw the largest improvement in average ranking across test periods, though it was incremental. That ranking gain did not drive an increase in CTR, possibly further highlighting the weaker performance of ChatGPT-4-written meta descriptions.

| Method | Beginning Avg. Rank | End Avg. Rank | Difference (negative = improved) |
|---|---|---|---|
| Manual | 18.0 | 17.6 | -2.2% |
| ChatGPT-4 | 22.3 | 21.4 | -4.0% |
| Google | 23.3 | 24.3 | +4.3% |
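The Difference column is the relative change in average position, (end - begin) / begin. Since a lower rank number means a better position, a negative value is an improvement. The sketch below uses the table’s values and makes that sign convention explicit.

```python
def rank_change(begin: float, end: float) -> float:
    """Relative change in average rank, in %. Negative means the rank improved."""
    return (end - begin) / begin * 100


avg_rank = {
    "Manual": (18.0, 17.6),
    "ChatGPT-4": (22.3, 21.4),
    "Google": (23.3, 24.3),
}

for method, (begin, end) in avg_rank.items():
    change = rank_change(begin, end)
    direction = "improved" if change < 0 else "declined"
    print(f"{method}: {change:+.1f}% ({direction})")
```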

Rewritten Meta Descriptions

Did it impact the test? Yes.

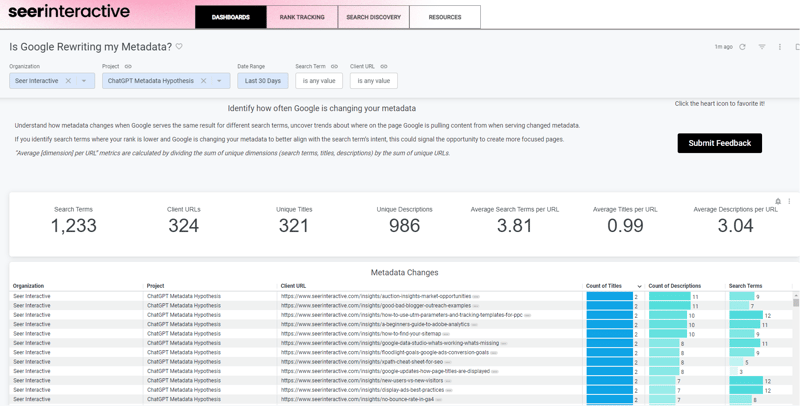

We used our cloud-based data platform, in order to analyze how often our implemented meta description was rewritten by Google in the search results. Here’s an example of how that report is viewed within the product’s dashboard:

Manual written meta descriptions were rewritten 130% more often than URLs in the Google-written group. This includes the Screaming Frog Guide page’s meta description that was rewritten 17 times over the course of 30 days.

| Method | Avg. Title Rewrites | Avg. Description Rewrites |
|---|---|---|
| Manual | 1.30 | 3.77 |
| ChatGPT-4 | 0.74 | 1.72 |
| Google | 0.85 | 1.64 |

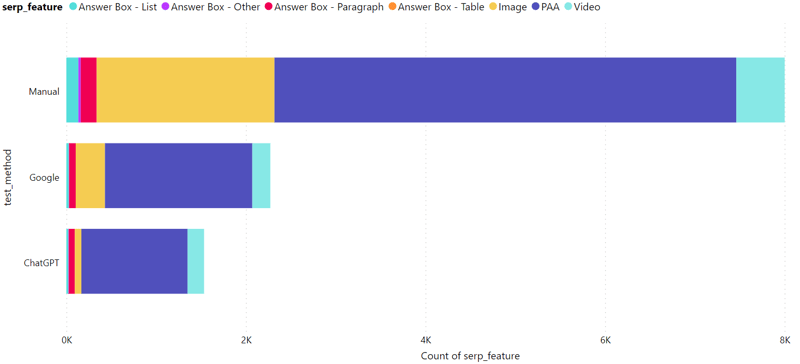
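The “130% more often” figure compares the average description rewrites for the Manual and Google groups: 3.77 / 1.64 - 1 ≈ 1.30. A minimal check using the table’s values:

```python
def pct_more(a: float, b: float) -> float:
    """How much larger a is than b, in %: (a / b - 1) * 100."""
    return (a / b - 1) * 100


# Average meta description rewrites, from the rewrites table.
avg_desc_rewrites = {"Manual": 3.77, "ChatGPT-4": 1.72, "Google": 1.64}

manual_vs_google = pct_more(avg_desc_rewrites["Manual"], avg_desc_rewrites["Google"])
print(f"Manual vs. Google: {manual_vs_google:.0f}% more rewrites")  # 130%
```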

SERP Features

Did it impact the test? Yes.

The Manual group appeared alongside 8,001 SERP feature instances, compared to 2,272 and 1,534 for the Google and ChatGPT-4 groups, respectively. This gap can be attributed to the group’s better average ranking, as shown in the Organic Rankings table above.

People Also Ask (PAA) results were by far the most visible SERP feature, while Image results drove the highest CTR when they appeared alongside our test pages.

| SERP Feature | Count | CTR |
|---|---|---|
| PAA | 7,968 | 0.99% |
| Image | 2,378 | 1.42% |
| Video | 924 | 1.01% |
| Answer Box - Paragraph | 318 | 1.28% |
| Answer Box - List | 183 | 1.34% |
| Answer Box - Other | 34 | 0.48% |
| Answer Box - Table | 2 | 0.00% |
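As a consistency check, the per-feature counts in this table sum to 11,807, the same total as the per-group figures above (8,001 + 2,272 + 1,534). The sketch below, using the table’s values, also picks out the most visible feature and the feature with the highest CTR.

```python
# SERP feature: (count, CTR in %), from the SERP features table.
serp_features = {
    "PAA": (7968, 0.99),
    "Image": (2378, 1.42),
    "Video": (924, 1.01),
    "Answer Box - Paragraph": (318, 1.28),
    "Answer Box - List": (183, 1.34),
    "Answer Box - Other": (34, 0.48),
    "Answer Box - Table": (2, 0.00),
}

feature_total = sum(count for count, _ in serp_features.values())
group_total = 8001 + 2272 + 1534  # Manual + Google + ChatGPT-4

most_visible = max(serp_features, key=lambda f: serp_features[f][0])
highest_ctr = max(serp_features, key=lambda f: serp_features[f][1])

print(feature_total, group_total)  # 11807 11807
print(most_visible, highest_ctr)   # PAA Image
```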

Impressions

Did it impact the test? Not as much as expected.

If one group had an outlier volume of impressions, it could increase our test’s margin of error. Measuring each group’s impressions helps us understand whether each test method had a comparable sample size of CTR data.

As expected, the Manual group had the most impressions. However, there was just an 11.4% (21,177-impression) difference between the highest- and lowest-impression groups.

| Method | Impressions | CTR |
|---|---|---|
| Manual | 206,364 | 1.12% |
| Google | 196,151 | 0.27% |
| ChatGPT-4 | 185,187 | 0.13% |

While pages in the Manual group received the most impressions, there was only a 5.2% difference in impressions compared to the Google group, despite a 38% difference in average ranking.
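The impression and ranking gaps quoted here are consistent with taking each difference relative to the smaller value (using the larger value as the base would put the high/low impression gap at about 10.3% instead of 11.4%). The 38% ranking figure matches the Manual and Google groups’ end-of-test average ranks. A short sketch with the helper name `pct_diff` chosen for illustration:

```python
impressions = {"Manual": 206_364, "Google": 196_151, "ChatGPT-4": 185_187}
end_avg_rank = {"Manual": 17.6, "Google": 24.3}


def pct_diff(high: float, low: float) -> float:
    """Difference between two values as a % of the smaller one."""
    return (high - low) / low * 100


high, low = max(impressions.values()), min(impressions.values())
gap = high - low                                                          # 21,177
gap_pct = pct_diff(high, low)                                             # ~11.4%
manual_vs_google = pct_diff(impressions["Manual"], impressions["Google"])  # ~5.2%
rank_gap = pct_diff(end_avg_rank["Google"], end_avg_rank["Manual"])        # ~38.1%

print(f"High/low gap: {gap:,} ({gap_pct:.1f}%)")
print(f"Manual vs. Google impressions: {manual_vs_google:.1f}%")
print(f"Google vs. Manual end avg. rank: {rank_gap:.1f}%")
```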

Conclusions

We know that we’re still in the early stages of what will be possible with AI for SEO.

Sign up for the Seer newsletter or get in touch with us to hear more about our tests and how we’re using AI to impact our team and clients.