Google held the first Google Webmaster Conference in Mountain View, CA, on Monday, November 4, 2019. The day was filled with lightning talks from Googlers on various teams, with Danny Sullivan as MC.

Conference Recap

The conference followed the Chatham House Rule, meaning that I cannot share the names of anyone who spoke. However, I can share what I learned. There were lightning talks, Q&As, and presentations about structured data, emojis in search, deduplication, crawling and rendering, search language, and more. There was even a product fair, where webmasters spent time talking one-on-one with various Google Search Product Managers to ask questions and provide feedback. Every session was valuable, but below are the ones I found most useful, along with their biggest takeaways.

Language Used for Search

Synonyms for Search:

One Googler explained how Google uses synonyms to understand queries, and how it sometimes gets them wrong. The talk broke this down well:

- How synonyms in queries work:

Image Courtesy: Google

- Contextual: Synonyms depend on other query words

- Google shared a story about a time when its top result for the query “united airlines” was Continental Airlines, a rival that did not mean the same thing. This is one example of how Google learned that similar ≠ interchangeable. Google then developed the concept of “siblings”: related terms, often rivals (cats and dogs), that should not be swapped for one another.
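To make the “contextual” and “siblings” ideas concrete, here is a minimal Python sketch of query expansion. The synonym table, the sibling list, and the function names (`expand_query`, `are_siblings`) are hypothetical and only illustrate the two rules described in the talk; this is not how Google actually implements synonyms.

```python
# Hypothetical tables for illustration only; not Google's synonym data.
SYNONYMS = {
    # word -> [(candidate, context words that license the swap)]
    "gm": [
        ("general motors", {"car", "truck", "dealer"}),
        ("genetically modified", {"food", "crops", "corn"}),
    ],
    "united": [("continental", {"airlines", "flights"})],
}

# "Siblings": related terms that must not be substituted for each other.
SIBLINGS = {
    frozenset({"united", "continental"}),
    frozenset({"cat", "dog"}),
}


def are_siblings(a: str, b: str) -> bool:
    return frozenset({a, b}) in SIBLINGS


def expand_query(query: str) -> list[str]:
    """Return the lowercased query plus any context-licensed synonym rewrites."""
    words = query.lower().split()
    context = set(words)
    rewrites = [" ".join(words)]
    for i, word in enumerate(words):
        for candidate, required_context in SYNONYMS.get(word, []):
            # Contextual: swap only if another query word supports this sense.
            if not required_context & context:
                continue
            # Similar != interchangeable: never swap in a sibling.
            if are_siblings(word, candidate):
                continue
            rewrites.append(" ".join(words[:i] + [candidate] + words[i + 1:]))
    return rewrites
```

For example, `expand_query("gm car dealer")` adds “general motors car dealer”, while `expand_query("united airlines")` returns only the original query because United and Continental are marked as siblings.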

Non-Compositional Compounds in Search:

- A compositional compound is “a phrase of two or more words where the words composing the phrase have the same meanings in the compound as their conventional meanings.” A non-compositional compound is one where the meanings differ.

- One example of a non-compositional compound is “New York,” which cannot be shortened to “New” or “York.” New Jersey, on the other hand, can be shortened to “Jersey” while keeping the same context of the query.

Image Courtesy: Google

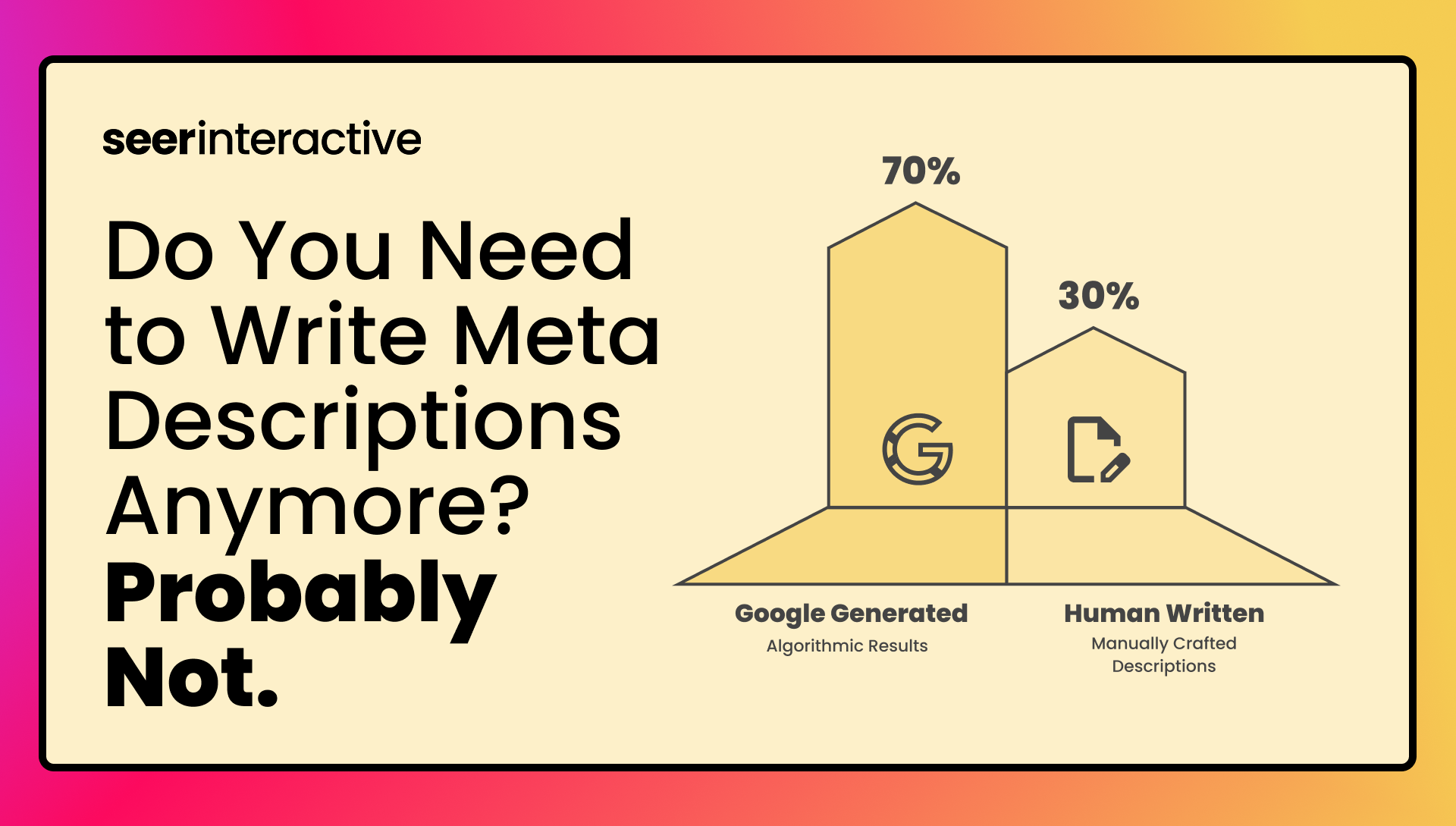
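One simple way to represent this is a per-compound list of the sub-terms that may stand in for the whole phrase. The `COMPOUNDS` table and `can_shorten` helper below are hypothetical, seeded only with the two examples from the talk.

```python
# Hypothetical lexicon seeded with the two examples from the talk.
# Each compound maps to the sub-terms that may stand in for the whole phrase.
COMPOUNDS = {
    "new york": set(),         # non-compositional: no single word keeps the meaning
    "new jersey": {"jersey"},  # "jersey" alone still points to the state
}


def can_shorten(phrase: str, short: str) -> bool:
    """True if `short` may replace `phrase` without changing the query's meaning."""
    return short.lower() in COMPOUNDS.get(phrase.lower(), set())
```

Unknown phrases return `False`, which errs on the side of not dropping words from a query.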

Emojis in Search

- Search initially ignored emojis and other special characters, for two reasons:

- Nobody searched for them

- They were expensive to index if nobody used them

- Over time, the “nobody searches for them” narrative changed: Google estimated that people were using emoji in more than 1 million searches per day.

- Now, emoji can be searched for and can even carry link equity when used as anchor text (although I would still recommend linking with regular text).
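One plausible way to make emoji searchable, sketched here as an assumption rather than Google's actual method, is to index each emoji alongside its Unicode character name so that “🍕” can also match text about pizza. The sketch uses Python's standard `unicodedata` module; `emoji_index_terms` is a hypothetical helper.

```python
import unicodedata


def emoji_index_terms(query: str) -> list[str]:
    """Split a query into tokens, adding the Unicode name of any symbol character."""
    terms = []
    for token in query.split():
        terms.append(token)
        for ch in token:
            # Category "So" (Symbol, other) covers most emoji code points.
            if unicodedata.category(ch) == "So":
                terms.append(unicodedata.name(ch, "").lower())
    return terms
```

For example, `emoji_index_terms("🍕 near me")` returns `["🍕", "slice of pizza", "near", "me"]`. Checking for category `So` is a simplification: it also matches non-emoji symbols, and it treats multi-code-point sequences such as flags as separate characters.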

Structured Data in Search

We had the pleasure of hearing from the Product Manager for Structured Data, who walked through the evolution of search and the importance of structured data. The lightning talk compared what SERPs looked like in the past with what they look like today, highlighting the growing number of SERP features meant to better engage searchers, and explained why structured data is important for gaining visibility in those features.

Not much was revealed about structured data that most SEOs don’t already know.

Why You Should Implement Structured Data

SERPs Are Changing

Image Courtesy: Google

Google’s goal is to provide the best search experience available so that users keep using its search engine instead of competitors like Bing or DuckDuckGo. Part of that experience is providing visually appealing results that help users in the discovery phase of their search and guide them to a site that can answer their query. Adding structured data helps Google understand your page and enables special search features.

Image Courtesy: Google
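Structured data is most commonly added to a page as a JSON-LD block. The Python sketch below builds a minimal schema.org `Article` object and wraps it in a script tag; the helper name and example values are hypothetical, and the properties a given rich result actually requires should be checked against Google's structured data documentation for that feature.

```python
import json


def article_jsonld(headline: str, author: str, date_published: str,
                   image_url: str) -> str:
    """Build a schema.org Article object and wrap it in a JSON-LD script tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date, e.g. "2019-11-04"
        "image": [image_url],
    }
    return ('<script type="application/ld+json">\n'
            + json.dumps(data, indent=2)
            + "\n</script>")
```

The returned tag can go in the page's `<head>` or `<body>`. The sketch does not escape `</` sequences inside string values, so sanitize untrusted input before embedding it.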

Web Deduplication

Web deduplication is the process of identifying and clustering duplicate web pages, picking a representative URL (otherwise known as the canonical URL), indexing the unique pages, and forwarding signals to the canonical URL. Google deduplicates because users don’t want to see the same page repeated, it leaves more room in the index for distinct content, it lets you retain signals when you redesign your site, and it helps Google find alternative names for a page.
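The process described above (cluster duplicates, pick a representative URL, forward signals to it) can be sketched in a few lines of Python. Everything here is a hypothetical simplification: clustering uses an exact hash of whitespace-normalized, lowercased HTML rather than near-duplicate detection, and the tie-breakers for choosing the canonical (a declared rel=canonical target first, then HTTPS, then the shortest URL) are assumptions, not Google's actual rules.

```python
import hashlib
from collections import defaultdict


def content_key(html: str) -> str:
    """Exact-duplicate fingerprint of whitespace-normalized, lowercased HTML."""
    normalized = " ".join(html.split()).lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def pick_canonical(urls: list[str], declared: dict[str, str]) -> str:
    """Prefer a rel=canonical target inside the cluster, else a heuristic."""
    for url in urls:
        target = declared.get(url)
        if target in urls:
            return target
    # Hypothetical tie-breakers: HTTPS first, then shortest, then alphabetical.
    return min(urls, key=lambda u: (not u.startswith("https://"), len(u), u))


def deduplicate(pages: dict[str, str], declared: dict[str, str],
                signals: dict[str, float]) -> dict[str, tuple[list[str], float]]:
    """Cluster duplicate pages and forward each cluster's signals to its canonical."""
    clusters: dict[str, list[str]] = defaultdict(list)
    for url, html in pages.items():
        clusters[content_key(html)].append(url)
    result = {}
    for urls in clusters.values():
        canonical = pick_canonical(urls, declared)
        # Forward signals: the canonical URL accumulates every duplicate's score.
        result[canonical] = (sorted(urls), sum(signals.get(u, 0) for u in urls))
    return result
```

Note that `pick_canonical` takes the first declared canonical it finds: if duplicates in a cluster declare different canonicals, the outcome depends on iteration order, which is one reason to keep canonical signals unambiguous.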

A Googler walked through the deduplication process and shared these suggestions:

Tips for Deduplication:

1. Use redirects to clue Google into your site redesign

2. Send Google meaningful HTTP result codes

3. Check your rel=canonical links

4. Use hreflang links to help Google localize

5. Keep reporting hijacking cases to the forums

6. Secure dependencies for secure pages

7. Keep canonical signals unambiguous
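Tip 4 is the most mechanical of these. The sketch below generates hreflang annotations as HTML `<link>` tags; the function name and URLs are hypothetical. Each language version should carry the same full set of tags, including one pointing to itself, because Google expects hreflang annotations to be reciprocal. The optional `x-default` entry names the fallback page for users whose language matches none of the listed versions.

```python
from html import escape
from typing import Dict, Optional


def hreflang_links(alternates: Dict[str, str], x_default: Optional[str] = None) -> str:
    """Build one <link rel="alternate" hreflang="..."> tag per language version."""
    tags = [
        f'<link rel="alternate" hreflang="{escape(lang)}" href="{escape(url)}">'
        for lang, url in sorted(alternates.items())
    ]
    if x_default:
        # x-default marks the fallback page for unmatched languages.
        tags.append(
            f'<link rel="alternate" hreflang="x-default" href="{escape(x_default)}">')
    return "\n".join(tags)
```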

Rendering

Rendering allows Googlebot to see a page the same way users do. Unsurprisingly, Googlebot renders with Chrome: Chrome loads the page, Googlebot fetches the resources the page requests, and once the content has loaded, Google takes a snapshot of the rendered page. That snapshot is what gets indexed.

Google faces two challenges when rendering content: fetching and JavaScript.

Fetching

Fetching is constrained by limited access (robots.txt) and limited crawl volume (Google takes your servers into account and won’t overcrawl your site, to help you avoid server issues).

Image Courtesy: Google

The Googler noted that Google cuts corners when fetching:

- Google does not obey HTTP caching rules (so don’t rely on clever caching)

- Google might not fetch everything (to minimize fetches)
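Because Google may ignore HTTP caching headers and tries to minimize fetches, one common technique for making resources “cacheable” regardless of headers (an assumption on my part, not something the talk prescribed) is to put a hash of the file's content into its URL, so the URL changes only when the content does. `fingerprinted_name` is a hypothetical helper.

```python
import hashlib
from pathlib import PurePosixPath


def fingerprinted_name(path: str, content: bytes, length: int = 8) -> str:
    """Insert a short content hash into a filename: app.js -> app.<hash>.js."""
    digest = hashlib.sha256(content).hexdigest()[:length]
    p = PurePosixPath(path)
    return str(p.with_name(f"{p.stem}.{digest}{p.suffix}"))
```

For example, `fingerprinted_name("static/app.js", data)` returns a name of the form `static/app.<8 hex chars>.js`. Reference that name from your HTML, and identical bytes will always live at the same URL.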

JavaScript

There is a lot of JavaScript (JS) on the web, so Google has to run a lot of it. Google is CPU-constrained and doesn’t want to waste resources, so it will interrupt scripts if it needs to. In short, don’t overload your pages with JS, and make sure the JS you do ship is usable.

Popular Ways to Fail with JavaScript

- Error loops (robots.txt, missing features)

- Cloaking

- Cryptocurrency Miners

Googlebot & Web Hosting

This lightning talk was split into three sections: how HTTP serving has evolved, robots.txt explained, and Google’s crawl rate.

How Has HTTP Serving Evolved?

Google shared how HTTP server popularity changed from 2008 to 2019, showing Apache losing market share to Nginx and Microsoft IIS (see image below). They also shared that traffic is shifting to HTTPS (no surprise there) and that HTTP fetching is getting faster.

Image Courtesy: Google

Robots.txt Explained

Robots.txt is a common way for webmasters to specify which parts of their websites crawlers may access. It is not a formal standard, and different search engines interpret it differently. Google is working with other search engines to define a standard for robots.txt files, but it’s not there yet.
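Python's standard library includes a robots.txt parser, which makes it easy to check how a given rule set treats a URL. The rules and URLs below are hypothetical examples.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(url: str, agent: str = "Googlebot") -> bool:
    """Check a URL against the parsed rules for the given user agent."""
    return parser.can_fetch(agent, url)
```

Be aware that `RobotFileParser` applies the first matching rule in file order, whereas Google uses the most specific (longest) matching rule, so results can differ when `Allow` and `Disallow` lines overlap. It also does not support the `*` and `$` path wildcards that Google honors.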

Googlebot has to check robots.txt for every single URL it crawls, so the robots.txt file has to be fetched before the content, and sometimes that fetch fails. If the robots.txt request returns a 200 or a 404, no worries. A 5xx error is OK, but only if it is transient. If robots.txt is unreachable, that’s bad.

Image Courtesy: Google

26% of the time, a robots.txt is unreachable (you can’t get indexed if you can’t be reached)!
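The fetch outcomes described above can be summarized as a small decision function. This is a hypothetical sketch: the enum, the function name, and especially the `max_transient_hours` cutoff for deciding when a 5xx error stops being “transient” are assumptions for illustration, not Google's actual thresholds.

```python
from enum import Enum
from typing import Optional


class RobotsOutcome(Enum):
    USE_RULES = "crawl, obeying the parsed rules"
    CRAWL_ALL = "crawl without restrictions"
    RETRY_LATER = "pause crawling and refetch robots.txt later"
    DO_NOT_CRAWL = "do not crawl"


def robots_decision(status: Optional[int], failing_hours: float = 0.0,
                    max_transient_hours: float = 24.0) -> RobotsOutcome:
    """Map a robots.txt fetch result to a crawl decision.

    `status` is None when the server could not be reached at all.
    `max_transient_hours` is a hypothetical cutoff, not a Google threshold.
    """
    if status is None:
        return RobotsOutcome.DO_NOT_CRAWL  # unreachable: the bad case
    if status == 200:
        return RobotsOutcome.USE_RULES
    if status == 404:
        return RobotsOutcome.CRAWL_ALL  # no robots.txt means no restrictions
    if 500 <= status < 600:
        # A 5xx is tolerable only while it is transient.
        if failing_hours <= max_transient_hours:
            return RobotsOutcome.RETRY_LATER
        return RobotsOutcome.DO_NOT_CRAWL
    raise ValueError(f"status {status} is not covered by this sketch")
```

Passing `None` for the status models the “unreachable” case, which the talk flagged as the one to worry about.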

Crawl Rate Limiting

Googlebot has a sophisticated way to determine how fast to crawl a site. As a webmaster, you can set a custom crawl rate in Search Console, but this feature is only recommended if you need crawling to slow down. Googlers said to leave crawling to Google unless your server is being overloaded.
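Google didn't share how its crawl-rate logic works, so the following is only a hypothetical sketch of one common approach, additive increase / multiplicative decrease (AIMD): raise the rate a little after each healthy response, and cut it sharply when the server shows strain (5xx or 429 responses, or slow responses). The class, its field names, and all default values are assumptions; the optional `user_cap` loosely mirrors the Search Console setting described above and, in this sketch, can only lower the rate.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CrawlRateLimiter:
    """Hypothetical AIMD limiter: speed up slowly, back off quickly."""
    rate: float = 1.0                 # current requests per second
    min_rate: float = 0.1
    max_rate: float = 10.0
    slow_ms: float = 1000.0           # responses slower than this count as strain
    step: float = 0.5                 # additive increase per healthy response
    backoff: float = 0.5              # multiplicative decrease on strain
    user_cap: Optional[float] = None  # optional owner-set upper limit

    def record(self, status: int, latency_ms: float) -> float:
        """Update and return the rate after observing one response."""
        strained = status >= 500 or status == 429 or latency_ms > self.slow_ms
        if strained:
            self.rate = max(self.min_rate, self.rate * self.backoff)
        else:
            self.rate = min(self.max_rate, self.rate + self.step)
        if self.user_cap is not None:
            self.rate = min(self.rate, self.user_cap)
        return self.rate
```

Starting at 1 request per second, two fast 200 responses raise the rate to 2.0, and a single 503 halves it back to 1.0.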

Action Items from the Google Webmaster Conference

- Focus on people, not search engines

- Keep monitoring your robots.txt

- Index bloat is real. Keep your site structure clean to help Google deliver your content

- Make content more “cacheable”

- Reduce wasteful JS

- Utilize structured data

- Focus on people, not search engines (it’s that important)

To summarize everything in one paragraph: Google is trying to provide the best answer to a query while also providing an optimal experience for the searcher. Make sure your site is technically sound (proper robots.txt, sitemaps, canonicalization, etc.), write the actual content for people, and use structured data to help Google understand your content and how it should be displayed. Google is constantly updating its algorithm and other search products, so it doesn’t do any good to “chase the algorithm.” Focus on giving people what they are looking for in the appropriate channel and medium.

Other Summaries of the Event

- Jackie Chu’s Notes from Google’s Webmaster Conference Mountain View: Product Summit 2019

- Barry Schwartz’s Takeaways from the Google Webmaster Conference Product Summit

- Barry Schwartz’s 5 tips and trends from Google Webmaster Conference

- Check Twitter for the hashtag #GWCPS
