Occasionally, I find myself connecting with random memories from my childhood that have no reason to stand out in my brain. For example, I remember a movie I saw at age 9 titled The Amazing Panda Adventure. It was a terribly acted family movie where a young American boy visits China and winds up helping his father (who is a zoologist) rescue a panda cub from (and I’m quoting IMDB here) “unscrupulous poachers and saves a panda reserve from officious bureaucrats.” It seems almost fitting that Google would choose “Panda” as the name for an algorithm that, in my experience, catches some well-intentioned websites in the bear trap of Google’s algorithmic penalty box.

Full disclaimer: I totally get it. Approximately 99% of the time, Google gets it right. The amount of low-quality, spammy, worthless content Google has been able to weed out over the past four years as a result of Panda is amazing. I’ve had plenty of clients who had been “doing content right” get successfully rewarded following a Panda update. This article, if anything, is more to bring to light that maybe Google isn’t 100% there in getting it right every single time.

At Seer, we’ve always been encouraged to share everything on our blog. We don’t keep things to ourselves, whether it’s wins, new tactics, failures, you name it. We’re hoping that the years of helping the SEO community will get us some karma back as I share how we’re tackling a problem and what we might be missing.

What is Panda?

I suppose the main problem I have is that Google is ever-so-vague on what the specific problem might be. Sure, I’ve seen the best practices and the leaked reviewer checklists. But at the end of the day, without clear communication on what issues they have with a site’s content, there is no way to remedy a problem that cannot be clearly defined. Google has always been very secretive about ranking factors (and rightfully so). However, when an algorithm that can make or break your organic traffic includes on-site factors such as “writing quality content” (a very subjective and non-quantifiable measurement), I’m hoping they throw us a bone.

Getting Caught in the Dragnet

We’ve all seen those sites that have unintentional duplicate content because their pagination isn’t set up correctly. This isn’t a spammy, malicious, or purposeful thing the site owner did. Instead, it’s a function of site architecture. At the very least, give us a Webmaster Tools messaging system so we can identify if we may have missed something. Google sent webmasters messages after the Penguin update and, in my opinion, that is more often than not an intentional penalty, where the actions of the site owner caused the issue. You’re telling them what they already know (they built spammy links), but can you tell us whether you found duplication on our site, too many thin pages, etc.?

If Google’s end goal is to make the web experience better, it seems Google should offer up these types of suggestions for websites not living up to their standards. At the very least, a barometer that distinguishes between “your parameters are causing duplication” and “your content is all bad, rewrite your entire site” would be helpful.

I know what you’re thinking, “read the leaked manual reviewer checklists/guidelines for site content.” We did just that and went as far as to create a checklist of our own to go through. And there is yet another strong case for a messaging system when it’s not even clear if your site was manually flagged by a reviewer or caught in an automatic algorithm.

When is Enough, Enough?

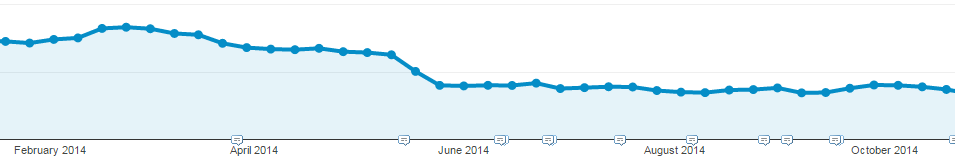

For the last nine months, the Seer team and I have been helping a client, Auto Accessories Garage, who was hit by the Panda 4.0 update. We’re at the point where we’ve tried everything in the book and we’re stumped. Do we keep tweaking? Do we just need to wait for an update? Without communication from Google, it’s difficult to understand next steps, especially when they aren’t even clear on whether Panda is running continuously.

The client gave their full blessing on publishing this article because they have nothing to hide. Some of the issues I’ll talk about were put in place for the explicit reason that they were trying to appease Google and not display duplicate content on their site. Everything is well intentioned: they’ve gone above and beyond most of the eCommerce sites I’ve seen and they were penalized with a loss of roughly half of their organic traffic overnight. We’ve implemented many changes to help improve their standing and reached out to several third-party consultants. We also got a response from someone at Google’s web spam team:

“I don't see anything obvious at first glance - this is probably something where you'd get some useful feedback from user-studies. From trying a handful of queries (...) it's hard to point at anything specific that you'd need to do. I think you're on the right track, but keep in mind that quality is more than just tweaking content blocks and noindexing types of pages.”

Ok, I get it - yes quality is more than that but again, that’s a subjective opinion. Seer totally pushes this philosophy to our clients: Do what’s best for the user, provide value, and Google rewards you. The problem here is that I believe all of the features and experiences this site has put in place for their users just aren’t being processed appropriately by the computerized calculation that is inflicting these penalties.

Duplicate Content Conundrum

The site in question, Auto Accessories Garage, is a family-owned car & truck accessory eCommerce retailer. I’ve found this vertical to be really interesting & challenging. Where a typical eCommerce site might have one or two configurations per product, in this case, one SKU may have hundreds or thousands of different configurations based on a vehicle. It’s the exact same floor mat but it’s shaped a bit differently to fit in an F-150 vs an F-250. You can see how the duplicate content here could get out of control.
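
To put a rough number on how similar two vehicle-specific pages can be, here’s a minimal Python sketch using the standard library’s `difflib`. The two descriptions are made up for illustration (they are not AAG’s actual copy), and this is only a quick way to see the overlap, not a claim about how Google measures duplication.

```python
from difflib import SequenceMatcher


def similarity(a: str, b: str) -> float:
    """Return a 0..1 similarity ratio between two strings."""
    return SequenceMatcher(None, a, b).ratio()


# Made-up descriptions for illustration only; not AAG's actual copy.
F150_MAT = "All-weather floor mat, custom fit for the Ford F-150."
F250_MAT = "All-weather floor mat, custom fit for the Ford F-250."

if __name__ == "__main__":
    # The two strings differ by a single character, so the ratio is close to 1.
    print(f"similarity: {similarity(F150_MAT, F250_MAT):.2f}")
```

Multiply that near-identical pair by every year, make, and model a product fits, and the scale of the problem becomes clear.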

So, what are your options? You can’t change the product specifications because you want to communicate actual valuable information to customers. And you also can’t feasibly write unique descriptions for a thousand different SKUs of the same exact product. You also want to rank for potentially all of these different configurations as you want to capture people searching for their specific vehicles. Most competitors in the space face the same problem and most of them deal with the duplication. But most of them haven’t been hit by Panda, so that’s where the problem lies.

To combat this issue, AAG took a unique approach. First, they wrote unique product descriptions separate from manufacturer descriptions. This is Ecomm 101: it ensured they were producing unique content, something Google has said is important. Then there’s the issue of duplication across multiple SKUs. Creatively, the team at AAG loaded this content dynamically via AJAX into every page except the main general product page. This way they’re not duplicating content across hundreds or potentially thousands of pages. They also blocked Googlebot from crawling these content “injection” pages in robots.txt.
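
A quick way to see what a robots.txt rule does to Googlebot’s view of those injected URLs is Python’s built-in `urllib.robotparser`. This is a minimal sketch under assumptions: the `/ajax/` path, the `example.com` URL, and the rule text are all hypothetical, since the site’s real robots.txt isn’t reproduced here.

```python
from urllib.robotparser import RobotFileParser


def can_googlebot_fetch(robots_txt: str, url: str) -> bool:
    """Parse robots.txt text and report whether Googlebot may fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", url)


# Hypothetical rules: one file that disallows the content-injection path,
# and one where that rule has been emptied out (an empty Disallow allows all).
BLOCKED = "User-agent: *\nDisallow: /ajax/\n"
UNBLOCKED = "User-agent: *\nDisallow:\n"

# Hypothetical URL standing in for an AJAX content endpoint.
INJECTION_URL = "https://www.example.com/ajax/product-content/123"

if __name__ == "__main__":
    print("blocked file:  ", can_googlebot_fetch(BLOCKED, INJECTION_URL))
    print("unblocked file:", can_googlebot_fetch(UNBLOCKED, INJECTION_URL))
```

If the check comes back `False` for a URL that supplies a page’s main content, the crawler is only seeing the shell of that page.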

Major fix #1: When AAG came on board right after Panda 4.0 hit, there was a lot of chatter about blocking AJAX, JS, & CSS in robots.txt. We thought we had it cracked: unblock these resources, because otherwise Google will not be able to load these pages and see all that unique content you have. The block was creating pages that look very thin, and it’s easy to see how the crawler wouldn’t understand them. Not only did we unblock this content to allow Googlebot to crawl it, but we also no-indexed about 1.7 million pages that just weren’t getting much traffic or were deemed “less valuable.”
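
For the no-index half of that fix, the selection logic can be as simple as a traffic threshold. The Python sketch below is an assumption-heavy illustration: the threshold value, the sample URLs, and the function names are hypothetical, and the real decision also weighed which pages were “less valuable,” which a single number doesn’t capture. The only piece taken as given is the standard robots meta tag.

```python
from typing import Dict, Iterable, List

# Standard robots meta tag asking search engines not to index a page.
NOINDEX_TAG = '<meta name="robots" content="noindex">'


def pages_to_noindex(visits_by_url: Dict[str, int], min_visits: int) -> List[str]:
    """Return URLs whose organic visits fall below the (hypothetical) threshold."""
    return sorted(url for url, visits in visits_by_url.items() if visits < min_visits)


def robots_meta(url: str, noindexed: Iterable[str]) -> str:
    """Return the tag to emit in a page's <head>, or an empty string."""
    return NOINDEX_TAG if url in set(noindexed) else ""


if __name__ == "__main__":
    # Made-up visit counts for illustration only.
    visits = {"/_Item/a": 0, "/_Item/b": 250, "/_Item/c": 3}
    low_traffic = pages_to_noindex(visits, min_visits=10)
    for url in visits:
        print(url, robots_meta(url, low_traffic) or "(indexable)")
```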

Panda 4.1 came and went with a small temporary improvement, but traffic fell back to normal lows a week or two later.

U/X vs. Googlebot/X

Another unique aspect of the vehicle accessory vertical that I’ve only ever previously seen on sites like Crutchfield is that the products available to you depend entirely on the type of car or truck you have. You can imagine how a site with products you couldn’t buy would be an extremely frustrating and confusing user experience (good U/X is also something Google encourages). Most sites in this industry have implemented a vehicle selector where you input the year, make, and model of your vehicle and results are filtered accordingly.

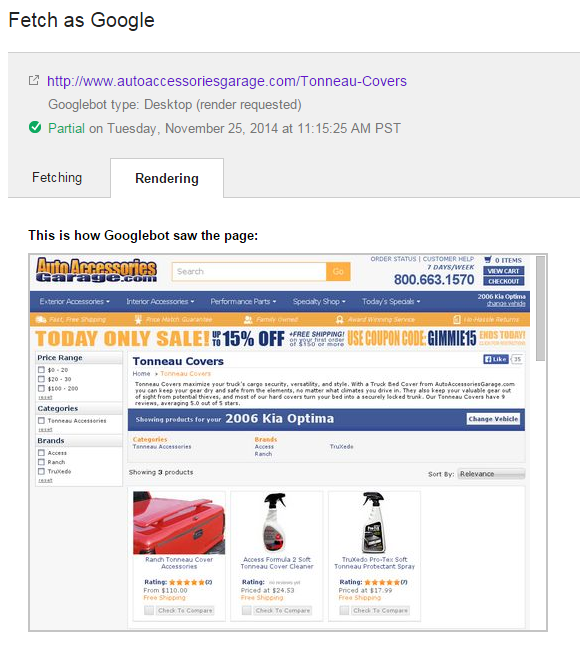

In early December, we saw something weird occurring in the “Fetch & Render” tool from Webmaster Tools. The category page would occasionally render with the vehicle “filtered” view. It seemed to be entirely random and sporadic, and we did multiple tests to make sure it wasn’t just a view our machines were showing based on previously visited pages. This became a huge problem when we saw that often, a vehicle was being selected where no products existed for that category. For example, tonneau covers (a truck bed accessory) do not exist for a Kia Optima, but Fetch & Render would show the tonneau covers category page as if an Optima was selected, thus showing a category page with no results. That’s a problem. Even further compounding this conundrum was that the Google cache would always display the page correctly without the “filtered” view.

Major fix #2: We weren’t quite sure if this was just a function of Fetch & Render or if this was indeed an issue with the way Googlebot was crawling the site. For U/X, once you select your vehicle, it’s cookied and carries across the entire site. To combat this, we introduced a mechanism that would prevent bots from carrying the cookies that resulted in improper rendering across pages, while still allowing the bot to interact with the JavaScript on that page. We couldn’t find any compelling evidence or proof that Googlebot does indeed store cookies (it seems to have been a highly debated topic); however, this view in Fetch & Render made it important enough for us to take a look at.
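
The kind of mechanism described above might look roughly like the following Python sketch. This is not AAG’s actual code: the cookie name `selected_vehicle`, the bot token list, and both function names are assumptions made for illustration.

```python
from typing import Dict, Tuple

# Hypothetical values: substrings used to spot crawler user agents, and the
# name of the cookie that remembers the shopper's selected vehicle.
BOT_TOKENS: Tuple[str, ...] = ("googlebot", "bingbot")
VEHICLE_COOKIE = "selected_vehicle"


def is_bot(user_agent: str) -> bool:
    """Return True if the user agent string contains a known crawler token."""
    ua = user_agent.lower()
    return any(token in ua for token in BOT_TOKENS)


def effective_cookies(user_agent: str, cookies: Dict[str, str]) -> Dict[str, str]:
    """Drop the vehicle cookie for crawlers; leave shoppers' cookies untouched."""
    if not is_bot(user_agent):
        return dict(cookies)
    return {name: value for name, value in cookies.items() if name != VEHICLE_COOKIE}


if __name__ == "__main__":
    incoming = {"selected_vehicle": "2014-kia-optima", "session": "abc123"}
    print(effective_cookies("Mozilla/5.0 (compatible; Googlebot/2.1)", incoming))
    print(effective_cookies("Mozilla/5.0 (Windows NT 10.0) Chrome/120", incoming))
```

With the vehicle cookie ignored for crawlers, a category page such as tonneau covers would render unfiltered for the bot instead of showing an empty Kia Optima view, while a shopper’s selection still carries across the site.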

Oh My Stars!

Another very interesting development occurred a few months prior to Auto Accessories Garage’s Panda hit in late May with the loss of their review star snippets in the SERPs. The team at AAG checked, rechecked, and triple checked their review markup and made sure it was implemented correctly. We even took a pass to make sure there was nothing they were missing. Even more supportive of correct implementation is Google’s testing tool, which properly displays the review stars as intended. Google is also still selectively displaying these stars when you do a “site:autoaccessoriesgarage.com” search, but not in general queries.

Google has stated they can selectively display stars and other rich snippets based on site quality, with a large number of sites losing this privilege in the past. The fact that these still haven’t returned, along with Google not awarding us a sitelink search box, seems to indicate that we’re still not back in Google’s good graces.

The Whole Enchilada

I’ve highlighted what we believed to be the “big” issues that were tripping us up in the algorithm, but we’ve also implemented MANY small, incremental changes in the past nine months to move Auto Accessories Garage in the right direction. For pure transparency, I’ve outlined these changes below. This was sent to our Google contact who made the statement above. Again, we realize it’s more than no-indexing and tweaking content, but I think it’s fair to say we’ve done a great deal more than that (including creating this pretty hilarious content piece). At this point, I think the only thing we haven’t done is to completely rewrite every piece of content on the site (though we did do a bit of that, as well). I’m not saying we didn’t miss anything, but we’ve exerted so much effort trying to course correct since Panda 4.0 that when Google directly tells us “there is nothing apparent, go ask some users,” it makes me throw my hands up a little.

We’ve also dug pretty deeply into competitor sites and have seen many similarities and, in some cases, even less SEO-friendly approaches to some of the unique challenges I mentioned above. I know it’s possible they just haven’t been caught yet, but it also leads me to believe that this is more of an algorithmic miscalculation than anything else.

| Date | Action | Notes |
| --- | --- | --- |
| 3/31/2014 | Review Rich Snippets Disappeared from SERPs | Review star snippets were lost; however, the snippets later returned in site: searches |
| May 2014 | Panda 4.0 | Site was hit by Panda 4.0 and lost roughly 40% of organic traffic |
| 6/17/2014 | No-indexed 1,733,992 /_Item/ pages that contain very similar content | Pages for vehicle variations of a product that received little to no traffic and/or revenue |
| 6/19/2014 | Unblocked Javascript & CSS previously blocked in robots.txt | Various content, reviews, & CSS were being pulled onto item pages (roughly 3 million) with Ajax that was blocked via robots.txt |
| 6/19/2014 | Added Vary: User-Agent header for mobile | To properly serve mobile pages |
| 7/21/2014 | Internal Search Pages Marked as noindex | Removes index bloat |
| 7/21/2014 | 301 redirects being used (instead of 302) | On non-www and https URLs to their respective www URL |
| 7/23/2014 | Removed Overly Optimized Year Internal links on VLP pages | Ex: “2014 Toyota Tundra Car Covers” to “2014”. Reverted change after unfavorable results to year-specific head terms. |
| August 2014 | Project began to rewrite the top 25-35 landing pages in each category to read more naturally and be less “stuffed with keywords” | Content blocks were previously being “smartly” generated by pulling together different blocks of text and then manually edited/approved by writers. Top pages rewritten and then checked to make sure main keywords weren’t being over optimized by appearing too many times on the page. |
| 8/30/2014 | Database tweaks to drastically reduce loading/query time when pulling records | Followed by a drastic reduction in “time spent downloading a page” in WMT |
| 9/15/2014 | No-indexed /Order-Status subfolder | Identified in Google Index |
| 9/15/2014 | Added nofollow to shopping cart internal links and noindex on cart subfolder | Identified in Google Index |
| 9/25/2014 | Multi-day rollout of Panda Update | Traffic up slightly, not a complete rebound; levels returned to normal lows a few weeks later |
| 9/30/2014 | Added recent customer review block to roughly 500 vehicle landing pages | More unique content added to differentiate each page |
| 10/7/2014 | Change instituted where customer review snippets pull in more content from the review before “read more” | Adding more unique content |
| 11/12/2014 | Updated Internal Footer Links to be less “overly optimized” | Changed from “Recent Sitewide Searches” to “More Like This,” lowered the number of links appearing on product pages, and made sure that they are only relevant to the product category and/or family |
| 11/12/2014 | Updated homepage to remove overly optimized anchor text and made full-width | |
| 11/24/2014 | Rolled out a consistent site-wide top horizontal navigation bar | Previous navigation was blocked by Ajax and located in different divs depending on page type. New navigation was instituted to ensure a more consistent user experience and hierarchy. |
| 12/3/2014 | Rolled out New Parameter structure for Vehicle Selector | Previously, selecting a vehicle make/model/year would reload the same URL with filtered results. Since this was a global setting (the vehicle carries across the site), we added a parameter so that when the vehicle is selected, the URL appends a ?year= parameter to the filtered results. Note: We have yet to see Googlebot identify any of these parameters in WMT; however, we believe this could have been negated by the project below. |
| 12/3/2014 | Rolled out changes to Googlebot | Similar to above, we saw via Fetch & Render that occasionally a vehicle would be selected for a page globally that had no products for a category (thus appearing blank). We instituted a change that would prevent Googlebot from storing any cookie or global vehicle when navigating to a new page. This was something that we felt would address a number of issues including Panda (pages with no content) and the loss of review snippets. Note: This user experience enhancement is somewhat unique to the auto industry. We store the customer vehicle in the session to dynamically serve results relevant to their vehicle when they visit different category pages. |
| January 2015 | Bounce Rate Analysis Rewrites | We identified a number of content pages that have a high bounce rate; we’ll be auditing each piece of content to remove overly optimized anchor links and ensure content is valuable. |
| Upcoming | Remove Remaining Hidden AJAX from robots.txt | 2 more AJAX calls remain disallowed that are on click events (not on load) pulling in return policy information and reviews. |
| Upcoming | URL Structure Capitalization Fix | Currently certain URLs are dependent upon a specific capitalization structure and won’t redirect to the correct page if mistyped. There is no duplication currently being indexed, but this rewrite fix will ensure mistyped URLs can still be accessed. |
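
The upcoming capitalization fix in the last row could be approached along these lines. This is only a Python sketch of the general idea (a case-insensitive lookup that 301-redirects a mistyped path to its canonical capitalization); the paths and function names are hypothetical, and the real rewrite rules have not been built yet.

```python
from typing import Dict, Iterable, Tuple


def build_case_index(canonical_paths: Iterable[str]) -> Dict[str, str]:
    """Map each lowercased path to its canonical capitalization."""
    return {path.lower(): path for path in canonical_paths}


def resolve_path(requested: str, index: Dict[str, str]) -> Tuple[int, str]:
    """Return (HTTP status, path) for a requested path.

    200 if the capitalization already matches, 301 to the canonical path if
    only the capitalization differs, and 404 if the path is unknown.
    """
    canonical = index.get(requested.lower())
    if canonical is None:
        return 404, requested
    if canonical == requested:
        return 200, requested
    return 301, canonical


if __name__ == "__main__":
    # Hypothetical canonical paths for illustration only.
    index = build_case_index(["/Car-Covers/Example-Brand", "/Order-Status"])
    for path in ("/Car-Covers/Example-Brand", "/car-covers/example-brand", "/nope"):
        print(path, "->", resolve_path(path, index))
```

Using a 301 rather than serving the same content at both capitalizations keeps a single indexable URL per page, in line with the earlier 302-to-301 change.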

Let us know what you think!

So, we come to you, the community, for a fresh set of eyes and a new perspective. The team at Auto Accessories Garage, many team members at Seer, and I have been combing the site for months and have exhausted everything we can think of short of rewriting every page. Are we missing something huge here? Or did Google just plain get it wrong?